
Lesson 3: Probability Distributions

Overview

In this Lesson, we take the next step toward inference. In Lesson 2, we introduced events and probability properties. In this Lesson, we will learn how to numerically quantify outcomes as a random variable. Then we will use the random variable to create mathematical functions that give probabilities for the random variable.

One of the most important discrete distributions is the binomial distribution, and the most important continuous distribution is the normal distribution. Both will be discussed in this lesson. We will also talk about how to compute probabilities for these two distributions.

Upon completion of this lesson, you should be able to:

- Distinguish between discrete and continuous random variables.

- Compute probabilities, cumulative probabilities, means and variances for discrete random variables.

- Identify binomial random variables and their characteristics.

- Calculate probabilities of binomial random variables.

- Describe the properties of the normal distribution.

- Find probabilities and percentiles of any normal distribution.

- Apply the Empirical rule.
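As a rough preview of the calculations this lesson builds toward, the sketch below computes one binomial probability and checks the Empirical Rule against the standard normal distribution. The coin-flip numbers and the helper name `binomial_pmf` are illustrative assumptions, not part of the lesson.

```python
from math import comb
from statistics import NormalDist


def binomial_pmf(k, n, p):
    """P(X = k) for a binomial random variable with n trials and success probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Hypothetical example: exactly 3 heads in 10 flips of a fair coin.
n, p = 10, 0.5
p_three_heads = binomial_pmf(3, n, p)

# Mean and variance of a binomial random variable: np and np(1 - p).
mean, variance = n * p, n * p * (1 - p)

# Empirical Rule: area within 1, 2, and 3 standard deviations of the mean.
z = NormalDist()  # standard normal: mean 0, standard deviation 1
within = {k: z.cdf(k) - z.cdf(-k) for k in (1, 2, 3)}

print(f"P(X = 3) = {p_three_heads:.4f}, mean = {mean}, variance = {variance}")
for k, area in within.items():
    print(f"within {k} SD of the mean: {area:.4f}")
```

The three areas come out close to the familiar 68%, 95%, and 99.7%.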


8.1.3: Distribution Needed for Hypothesis Testing


Earlier in the course, we discussed sampling distributions. Particular distributions are associated with hypothesis testing. We perform tests of a population mean using a normal distribution or a Student's \(t\)-distribution. (Remember, use a Student's \(t\)-distribution when the population standard deviation is unknown and the distribution of the sample mean is approximately normal.) We perform tests of a population proportion using a normal distribution (usually the sample size \(n\) is large).

If you are testing a single population mean, the distribution for the test is for means:

\[\bar{X} \sim N\left(\mu_{x}, \frac{\sigma_{x}}{\sqrt{n}}\right)\]

The population parameter is \(\mu\). The estimated value (point estimate) for \(\mu\) is \(\bar{x}\), the sample mean.
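The distribution of \(\bar{X}\) above can be turned into a test statistic by standardizing the sample mean. The sketch below is a minimal illustration that assumes a known population standard deviation; the numbers and the function name `z_test_mean` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def z_test_mean(x_bar, mu_0, sigma, n):
    """Two-sided normal test of H0: mu = mu_0 with known population sigma."""
    se = sigma / sqrt(n)          # standard deviation of the sample mean
    z = (x_bar - mu_0) / se       # standardized distance from the hypothesized mean
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p_value


# Hypothetical data: sample mean 53 from n = 25, testing mu = 50 with sigma = 10.
se, z, p_value = z_test_mean(x_bar=53, mu_0=50, sigma=10, n=25)
print(f"standard error = {se:.2f}, z = {z:.2f}, p-value = {p_value:.4f}")
```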

If you are testing a single population proportion, the distribution for the test is for proportions or percentages:

\[P' \sim N\left(p, \sqrt{\frac{pq}{n}}\right)\]

The population parameter is \(p\). The estimated value (point estimate) for \(p\) is \(p'\). \(p' = \frac{x}{n}\) where \(x\) is the number of successes and \(n\) is the sample size.

Assumptions

When you perform a hypothesis test of a single population mean \(\mu\) using a Student's \(t\)-distribution (often called a \(t\)-test), there are fundamental assumptions that need to be met in order for the test to work properly. Your data should be a simple random sample that comes from a population that is approximately normally distributed. You use the sample standard deviation to approximate the population standard deviation. (Note that if the sample size is sufficiently large, a \(t\)-test will work even if the population is not approximately normally distributed).

When you perform a hypothesis test of a single population mean \(\mu\) using a normal distribution (often called a \(z\)-test), you take a simple random sample from the population. The population you are testing is normally distributed or your sample size is sufficiently large. You know the value of the population standard deviation which, in reality, is rarely known.

When you perform a hypothesis test of a single population proportion \(p\), you take a simple random sample from the population. You must meet the conditions for a binomial distribution, which are: there are a certain number \(n\) of independent trials, the outcomes of any trial are success or failure, and each trial has the same probability of a success \(p\). The shape of the binomial distribution needs to be similar to the shape of the normal distribution. To ensure this, the quantities \(np\) and \(nq\) must both be greater than five (\(np > 5\) and \(nq > 5\)). Then the binomial distribution of a sample (estimated) proportion can be approximated by the normal distribution with \(\mu = p\) and \(\sigma = \sqrt{\frac{pq}{n}}\). Remember that \(q = 1 - p\).
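The conditions and the normal approximation for a proportion can be sketched as follows. This is an illustration under assumed inputs (60 successes in 100 trials, hypothesized \(p = 0.5\)); the function name `proportion_z_test` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_test(x, n, p_0):
    """Two-sided normal test of H0: p = p_0 for x successes in n trials."""
    q_0 = 1 - p_0
    # Normal-approximation conditions from the text: np > 5 and nq > 5.
    if not (n * p_0 > 5 and n * q_0 > 5):
        raise ValueError("np and nq must both be greater than 5")
    p_prime = x / n                    # point estimate of p
    se = sqrt(p_0 * q_0 / n)           # sigma = sqrt(pq / n) under H0
    z = (p_prime - p_0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_prime, se, z, p_value


p_prime, se, z, p_value = proportion_z_test(x=60, n=100, p_0=0.5)
print(f"p' = {p_prime:.2f}, se = {se:.3f}, z = {z:.2f}, p-value = {p_value:.4f}")
```

Raising an error when the conditions fail is one design choice; a real analysis might instead fall back to an exact binomial calculation.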

In order for a hypothesis test’s results to be generalized to a population, certain requirements must be satisfied.

When testing for a single population mean:

- A Student's \(t\)-test should be used if the data come from a simple, random sample and the population is approximately normally distributed, or the sample size is large, with an unknown standard deviation.

- The normal test will work if the data come from a simple, random sample and the population is approximately normally distributed, or the sample size is large, with a known standard deviation.

When testing a single population proportion, use a normal test if the data come from a simple, random sample, fulfill the requirements for a binomial distribution, and the mean number of successes and the mean number of failures satisfy the conditions \(np > 5\) and \(nq > 5\), where \(n\) is the sample size, \(p\) is the probability of a success, and \(q\) is the probability of a failure.
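The selection rules summarized above can be written as a small decision helper. This is only a sketch of the logic in this section; the function name and its string arguments are hypothetical.

```python
def choose_test(parameter, sigma_known=False):
    """Pick the test suggested by this section for a single-population hypothesis.

    parameter: "mean" or "proportion" (labels assumed for this sketch).
    sigma_known: whether the population standard deviation is known.
    """
    if parameter == "proportion":
        return "normal test for a proportion (requires np > 5 and nq > 5)"
    if parameter == "mean":
        return "normal test" if sigma_known else "Student's t-test"
    raise ValueError("parameter must be 'mean' or 'proportion'")


print(choose_test("mean", sigma_known=True))
print(choose_test("mean", sigma_known=False))
print(choose_test("proportion"))
```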

Formula Review

If there is no given preconceived \(\alpha\), then use \(\alpha = 0.05\).

Types of Hypothesis Tests

- Single population mean, known population variance (or standard deviation): Normal test.

- Single population mean, unknown population variance (or standard deviation): Student's \(t\)-test.

- Single population proportion: Normal test.

- For a single population mean, we may use a normal distribution with the following mean and standard deviation. Means: \(\mu = \mu_{\bar{x}}\) and \(\sigma_{\bar{x}} = \frac{\sigma_{x}}{\sqrt{n}}\).

- For a single population proportion, we may use a normal distribution with the following mean and standard deviation. Proportions: \(\mu = p\) and \(\sigma = \sqrt{\frac{pq}{n}}\).

The Student's \(t\)-distribution has the following properties:

- It is continuous and can assume any real value.

- The pdf is symmetrical about its mean of zero. However, it is more spread out and flatter at the apex than the normal distribution.

- It approaches the standard normal distribution as \(n\) gets larger.

- There is a "family" of \(t\)-distributions: every representative of the family is completely defined by the number of degrees of freedom which is one less than the number of data items.
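These properties of the \(t\)-distribution can be checked numerically. The sketch below writes out the standard \(t\) density with `math.gamma` and compares it with the standard normal density; the function name `t_pdf` and the chosen degrees of freedom are assumptions for illustration.

```python
from math import gamma, pi, sqrt
from statistics import NormalDist


def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


normal = NormalDist()  # standard normal for comparison

for df in (2, 5, 30, 100):
    # Height at the apex (x = 0) and in the tail (x = 3).
    print(f"df = {df:>3}: apex {t_pdf(0, df):.4f}, tail {t_pdf(3, df):.4f}")
print(f"normal  : apex {normal.pdf(0):.4f}, tail {normal.pdf(3):.4f}")
```

The apex is lower and the tail is heavier for small degrees of freedom, and both values move toward the normal ones as the degrees of freedom grow.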


Hypothesis testing

Bayesian methods.

- Analysis of variance and significance testing

- Regression model

- Least squares method

- Analysis of variance and goodness of fit

- Significance testing

- Residual analysis

- Model building

- Correlation

- Time series and forecasting

- Nonparametric methods

- Acceptance sampling

- Statistical process control

- Sample survey methods

- Decision analysis

Our editors will review what you’ve submitted and determine whether to revise the article.

- Arizona State University - Educational Outreach and Student Services - Basic Statistics

- Princeton University - Probability and Statistics

- Statistics LibreTexts - Introduction to Statistics

- University of North Carolina at Chapel Hill - The Writing Center - Statistics

- Corporate Finance Institute - Statistics

- statistics - Children's Encyclopedia (Ages 8-11)

- statistics - Student Encyclopedia (Ages 11 and up)

- Table Of Contents

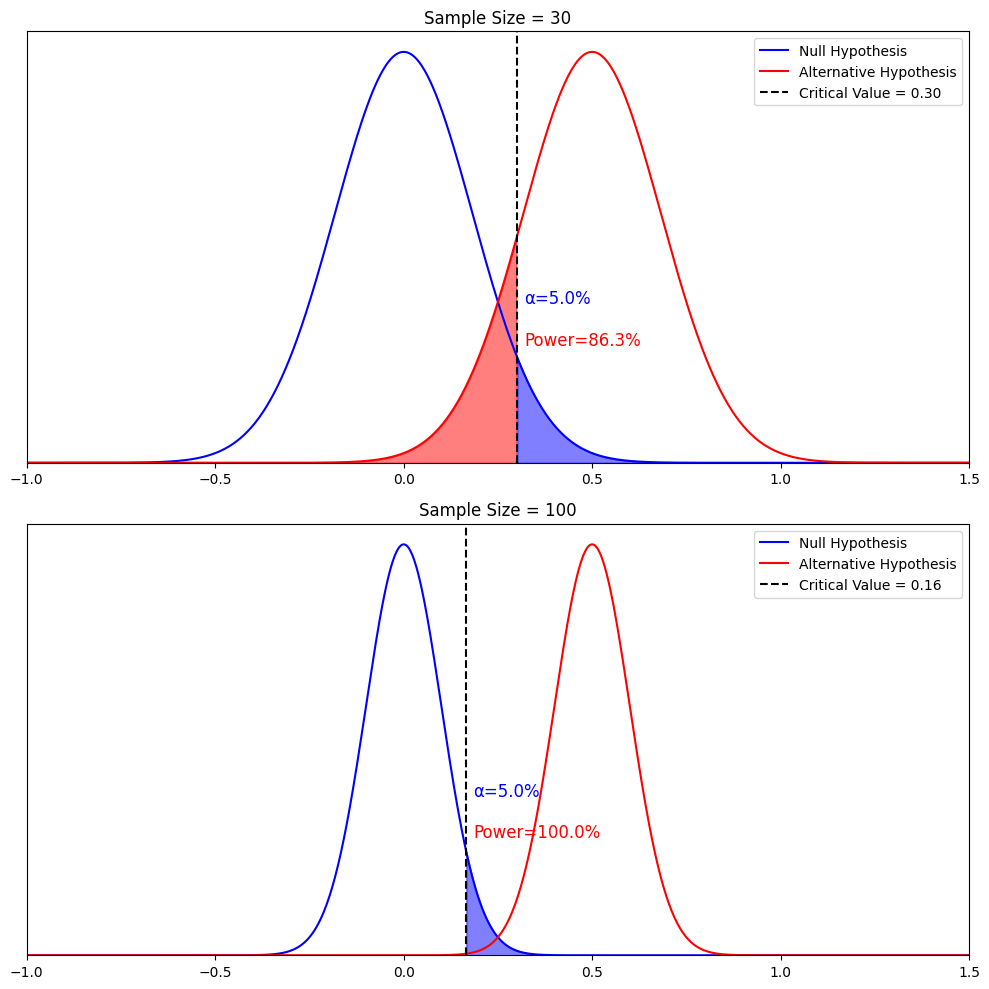

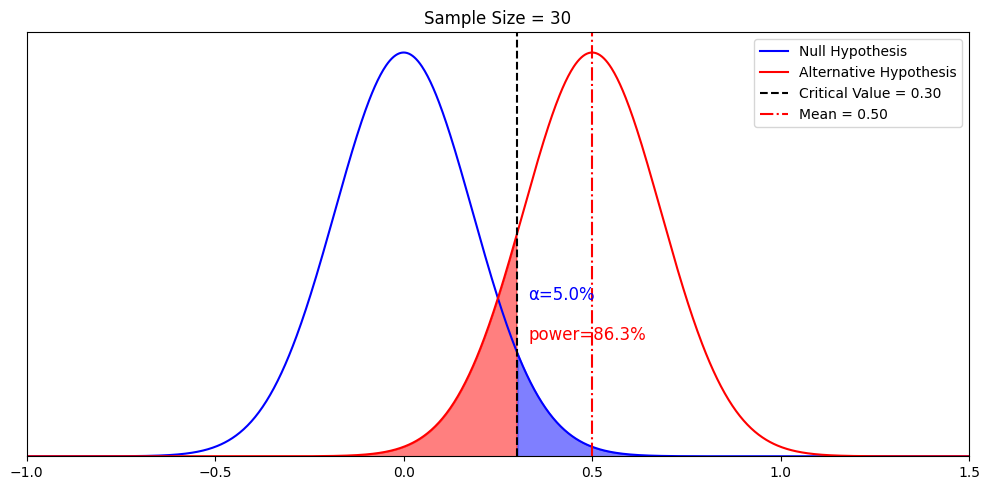

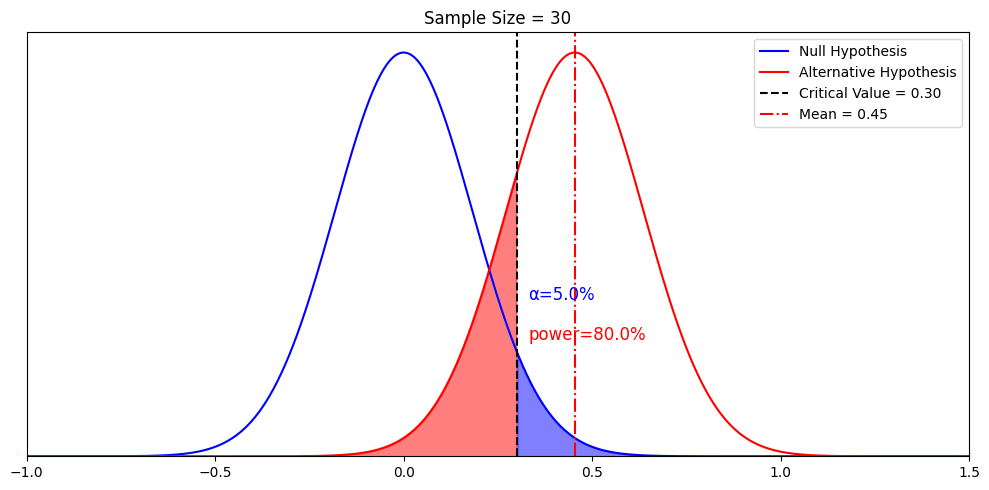

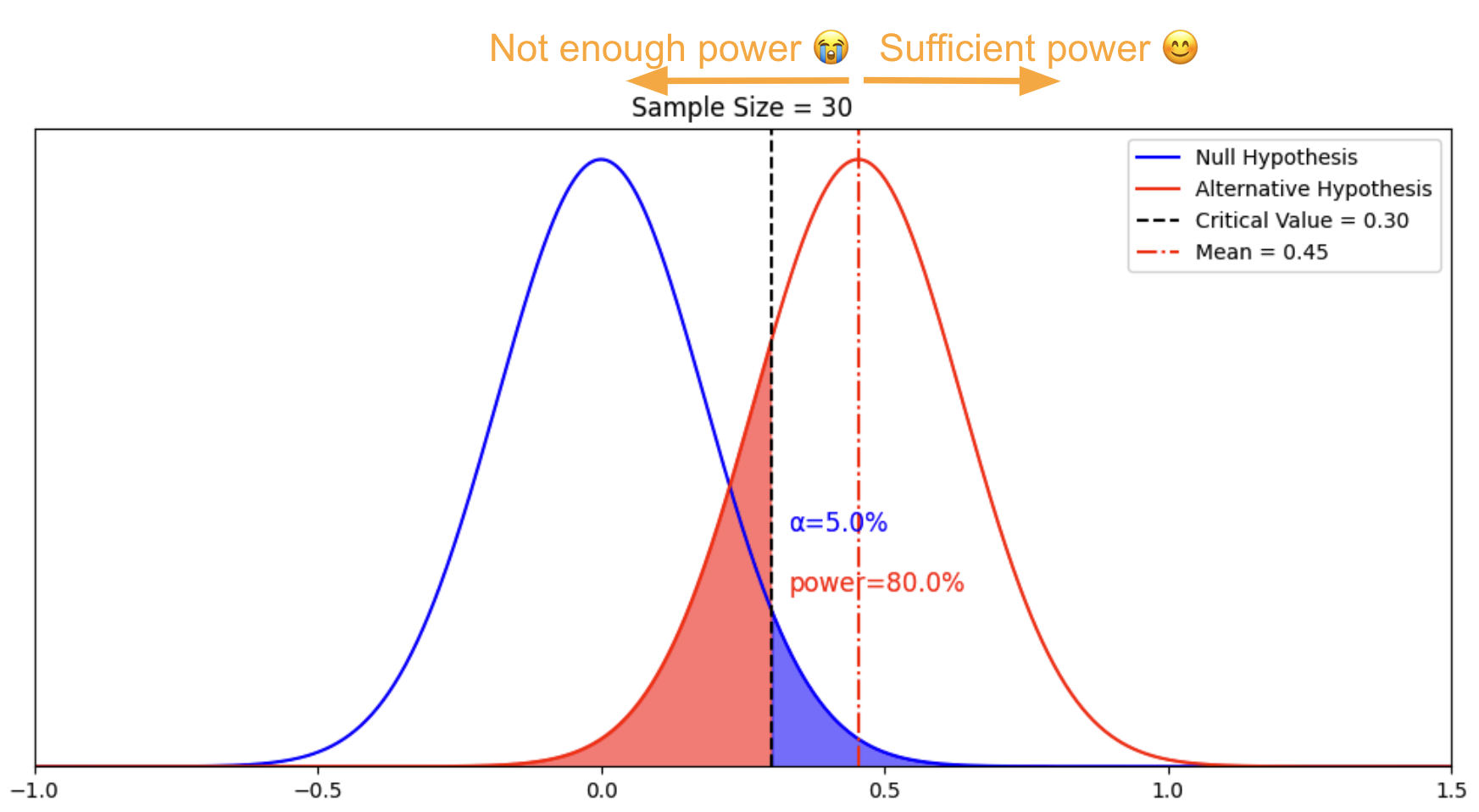

Hypothesis testing is a form of statistical inference that uses data from a sample to draw conclusions about a population parameter or a population probability distribution. First, a tentative assumption is made about the parameter or distribution. This assumption is called the null hypothesis and is denoted by H₀. An alternative hypothesis (denoted Hₐ), which is the opposite of what is stated in the null hypothesis, is then defined. The hypothesis-testing procedure involves using sample data to determine whether or not H₀ can be rejected. If H₀ is rejected, the statistical conclusion is that the alternative hypothesis Hₐ is true.


For example, assume that a radio station selects the music it plays based on the assumption that the average age of its listening audience is 30 years. To determine whether this assumption is valid, a hypothesis test could be conducted with the null hypothesis given as H₀: μ = 30 and the alternative hypothesis given as Hₐ: μ ≠ 30. Based on a sample of individuals from the listening audience, the sample mean age, x̄, can be computed and used to determine whether there is sufficient statistical evidence to reject H₀. Conceptually, a value of the sample mean that is “close” to 30 is consistent with the null hypothesis, while a value of the sample mean that is “not close” to 30 provides support for the alternative hypothesis. What is considered “close” and “not close” is determined by using the sampling distribution of x̄.
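One way to make “close” concrete is to compute the range of sample means that would not lead to rejecting H₀. The sketch below assumes a known population standard deviation of 8 years, a sample of 40 listeners, and a 0.05 level of significance; none of these numbers come from the example above.

```python
from math import sqrt
from statistics import NormalDist

mu_0 = 30      # hypothesized mean age under H0
sigma = 8      # assumed population standard deviation (hypothetical)
n = 40         # assumed sample size (hypothetical)
alpha = 0.05   # assumed level of significance

se = sigma / sqrt(n)                          # standard deviation of the sample mean
z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed critical value
lower, upper = mu_0 - z_crit * se, mu_0 + z_crit * se


def is_close(x_bar):
    """True if the sample mean falls inside the non-rejection region."""
    return lower <= x_bar <= upper


print(f"'close' to 30 means between {lower:.2f} and {upper:.2f}")
print(is_close(31), is_close(33))
```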

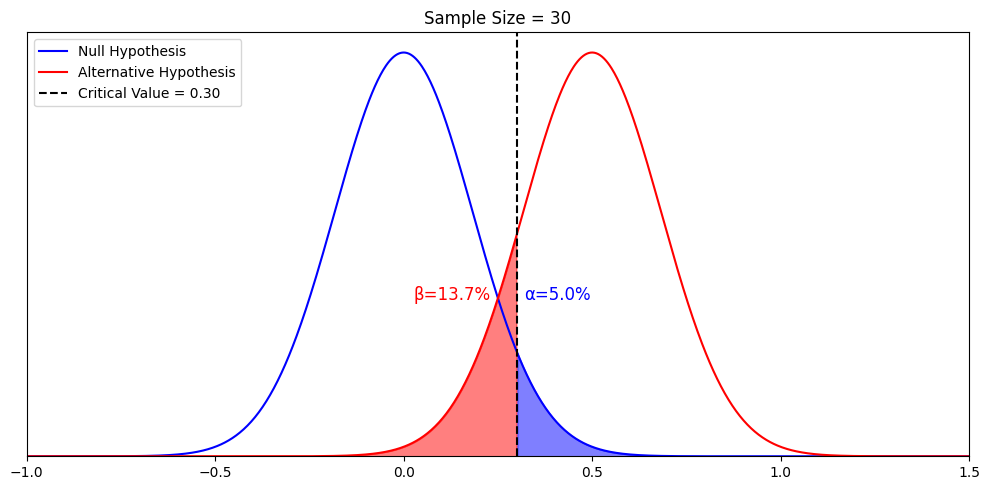

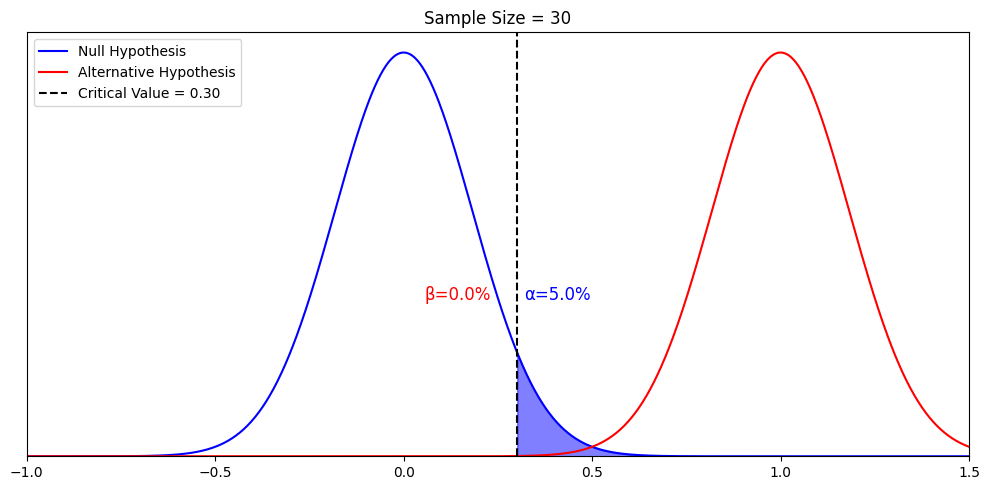

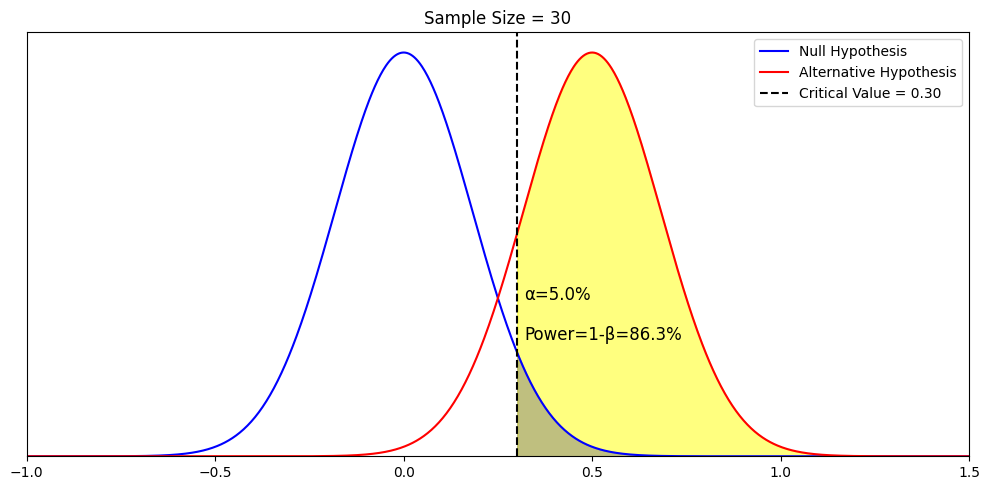

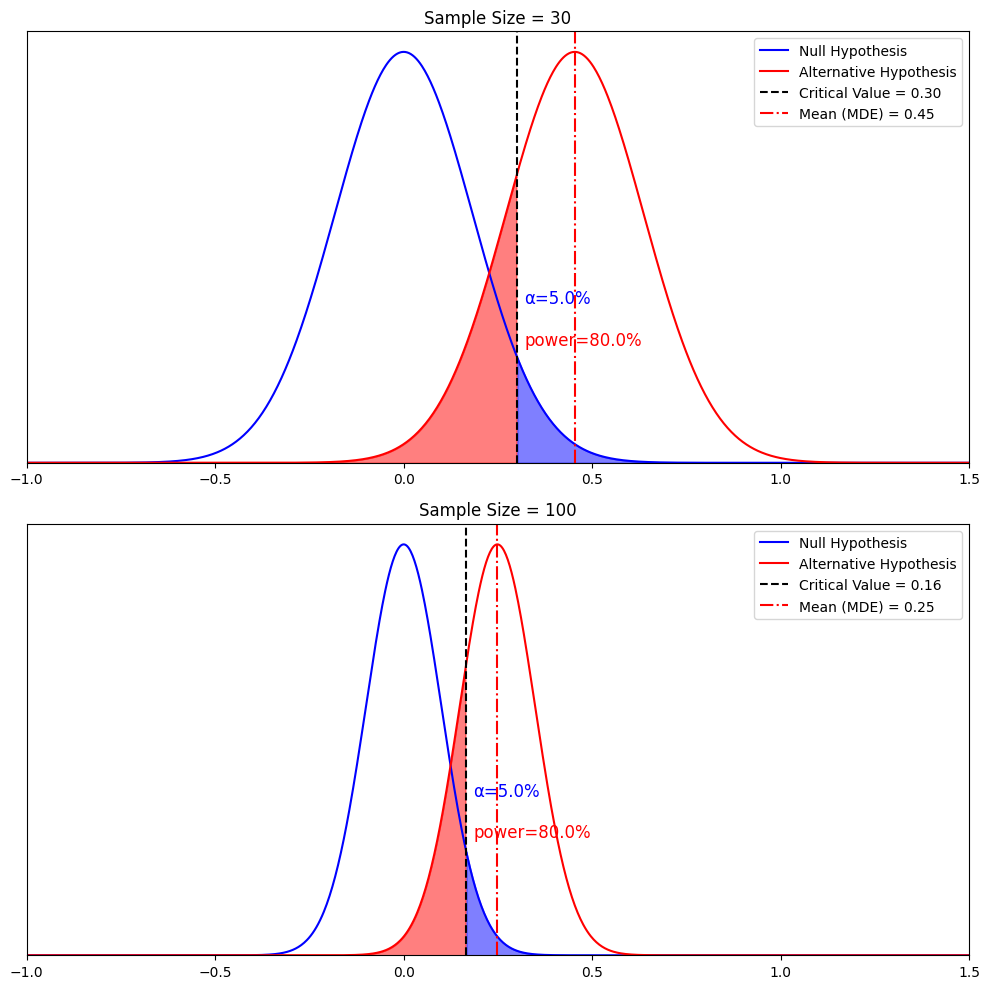

Ideally, the hypothesis-testing procedure leads to the acceptance of H₀ when H₀ is true and the rejection of H₀ when H₀ is false. Unfortunately, since hypothesis tests are based on sample information, the possibility of errors must be considered. A type I error corresponds to rejecting H₀ when H₀ is actually true, and a type II error corresponds to accepting H₀ when H₀ is false. The probability of making a type I error is denoted by α, and the probability of making a type II error is denoted by β.

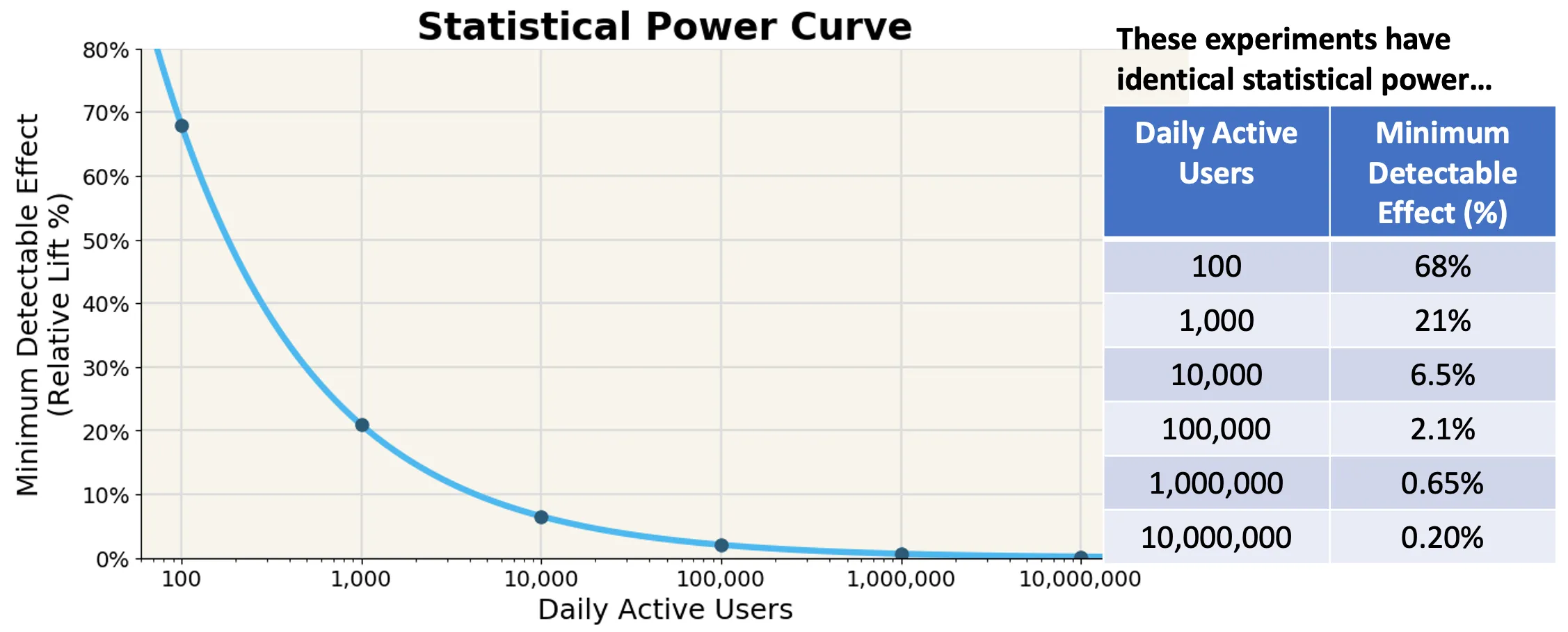

In using the hypothesis-testing procedure to determine if the null hypothesis should be rejected, the person conducting the hypothesis test specifies the maximum allowable probability of making a type I error, called the level of significance for the test. Common choices for the level of significance are α = 0.05 and α = 0.01. Although most applications of hypothesis testing control the probability of making a type I error, they do not always control the probability of making a type II error. A graph known as an operating-characteristic curve can be constructed to show how changes in the sample size affect the probability of making a type II error.
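The meaning of the level of significance can be illustrated by simulation: when the null hypothesis is true, a test run at α = 0.05 should reject in roughly 5% of repeated samples. The sketch below assumes a normal population with a known standard deviation; all parameter values and the function name are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def type_i_error_rate(alpha=0.05, mu_0=30.0, sigma=8.0, n=25, trials=2000, seed=0):
    """Share of samples drawn under a true H0 that a two-tailed z-test rejects."""
    rng = random.Random(seed)                     # fixed seed for repeatability
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sigma / sqrt(n)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu_0, sigma) for _ in range(n)]  # H0 is true here
        z = (fmean(sample) - mu_0) / se
        if abs(z) > z_crit:
            rejections += 1                       # a type I error
    return rejections / trials


rate = type_i_error_rate()
print(f"observed type I error rate: {rate:.3f}")
```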

A concept known as the p-value provides a convenient basis for drawing conclusions in hypothesis-testing applications. The p-value is a measure of how likely the sample results are, assuming the null hypothesis is true; the smaller the p-value, the less likely the sample results. If the p-value is less than α, the null hypothesis can be rejected; otherwise, the null hypothesis cannot be rejected. The p-value is often called the observed level of significance for the test.
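The decision rule just described can be sketched for a test statistic that follows the standard normal distribution. The function names and the `alternative` labels below are assumptions made for this illustration.

```python
from statistics import NormalDist


def p_value_from_z(z, alternative="two-sided"):
    """p-value for a standard normal test statistic z."""
    cdf = NormalDist().cdf
    if alternative == "less":
        return cdf(z)                      # lower-tail area
    if alternative == "greater":
        return 1 - cdf(z)                  # upper-tail area
    if alternative == "two-sided":
        return 2 * (1 - cdf(abs(z)))       # both tails
    raise ValueError("alternative must be 'less', 'greater', or 'two-sided'")


def decide(p_value, alpha=0.05):
    """Reject H0 only when the p-value is less than alpha."""
    return "reject H0" if p_value < alpha else "cannot reject H0"


z = 2.1  # hypothetical test statistic
for alt in ("two-sided", "greater", "less"):
    p = p_value_from_z(z, alt)
    print(f"{alt:>9}: p = {p:.4f} -> {decide(p)}")
```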

A hypothesis test can be performed on parameters of one or more populations as well as in a variety of other situations. In each instance, the process begins with the formulation of null and alternative hypotheses about the population. In addition to the population mean, hypothesis-testing procedures are available for population parameters such as proportions, variances, standard deviations, and medians.

Hypothesis tests are also conducted in regression and correlation analysis to determine if the regression relationship and the correlation coefficient are statistically significant (see below Regression and correlation analysis). A goodness-of-fit test refers to a hypothesis test in which the null hypothesis is that the population has a specific probability distribution, such as a normal probability distribution. Nonparametric statistical methods also involve a variety of hypothesis-testing procedures.

Bayesian methods

The methods of statistical inference previously described are often referred to as classical methods. Bayesian methods (so called after the English mathematician Thomas Bayes) provide alternatives that allow one to combine prior information about a population parameter with information contained in a sample to guide the statistical inference process. A prior probability distribution for a parameter of interest is specified first. Sample information is then obtained and combined through an application of Bayes’s theorem to provide a posterior probability distribution for the parameter. The posterior distribution provides the basis for statistical inferences concerning the parameter.
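The prior-to-posterior step can be illustrated with a standard textbook case: a Beta prior for a population proportion combined with binomial sample data, for which Bayes’s theorem gives another Beta distribution. The prior parameters and the sample counts below are hypothetical, and this conjugate model is only one of many possible choices.

```python
def update_beta(prior_a, prior_b, successes, trials):
    """Posterior Beta parameters after observing binomial data.

    With a Beta(a, b) prior on a proportion, the posterior is
    Beta(a + successes, b + failures).
    """
    failures = trials - successes
    return prior_a + successes, prior_b + failures


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


prior = (2, 2)                                   # hypothetical prior centered on 0.5
posterior = update_beta(*prior, successes=7, trials=10)

print(f"prior mean     = {beta_mean(*prior):.3f}")
print(f"posterior      = Beta{posterior}")
print(f"posterior mean = {beta_mean(*posterior):.3f}")
```

The posterior mean lies between the prior mean and the sample proportion of 0.7, which shows how prior information and sample information are combined.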

A key, and somewhat controversial, feature of Bayesian methods is the notion of a probability distribution for a population parameter. According to classical statistics, parameters are constants and cannot be represented as random variables. Bayesian proponents argue that, if a parameter value is unknown, then it makes sense to specify a probability distribution that describes the possible values for the parameter as well as their likelihood. The Bayesian approach permits the use of objective data or subjective opinion in specifying a prior distribution. With the Bayesian approach, different individuals might specify different prior distributions. Classical statisticians argue that for this reason Bayesian methods suffer from a lack of objectivity. Bayesian proponents argue that the classical methods of statistical inference have built-in subjectivity (through the choice of a sampling plan) and that the advantage of the Bayesian approach is that the subjectivity is made explicit.

Bayesian methods have been used extensively in statistical decision theory (see below Decision analysis). In this context, Bayes’s theorem provides a mechanism for combining a prior probability distribution for the states of nature with sample information to provide a revised (posterior) probability distribution about the states of nature. These posterior probabilities are then used to make better decisions.


4. Probability, Inferential Statistics, and Hypothesis Testing

4a. Probability and Inferential Statistics

In this chapter, we will focus on connecting concepts of probability with the logic of inferential statistics.

“The whole problem with the world is that fools and fanatics are always so certain of themselves, and wiser people so full of doubts.” (Bertrand Russell, 1872–1970)

“Medicine is a science of uncertainty and an art of probability.” (William Osler, 1849–1919)

These notable quotes represent why probability is critical for a basic understanding of scientific reasoning.

In many ways, the process of postsecondary education is all about instilling a sense of doubt and wonder, and the ability to estimate probabilities. As a matter of fact, that essentially sums up the entire reason why you are in this course. So let us tackle probability.

We will be keeping our coverage of probability to a very simple level, because the introductory statistics we will cover rely on only simple probability. That said, I encourage you to read further on compound and conditional probabilities, because they will certainly make you smarter at real-life decision making. We will briefly touch on examples of how bad people can be at using probability in real life, and we will then address what probability has to do with inferential statistics. Finally, I will introduce you to the central limit theorem. This is probably one of the heftiest math concepts in the course, but worry not. Its implications are easy to learn, and the concepts behind it can be demonstrated empirically in the interactive exercises.

First, we need to define probability. In a situation where several different outcomes are possible, the probability of any specific outcome is a fraction or proportion of all possible outcomes. Another way of saying that is this. If you wish to answer the question, “What are the chances that outcome would have happened?”, you can calculate the probability as the ratio of possible successful outcomes to all possible outcomes.


People often use the rolling of dice as examples of simple probability problems.

If you were to roll one typical die, which has a number on each side from 1 to 6, then the simple probability of rolling a 1 would be 1/6. There are six possible outcomes, but only 1 of them is the successful outcome, that of rolling a 1.


Another common example used to introduce simple probability is cards. In a standard deck of casino cards, there are 52 cards. There are 4 aces in such a deck of cards (Aces are the “1” card, and there is 1 in each suit – hearts, spades, diamonds and clubs.)

If you were to ask the question “what is the probability that a card drawn at random from a deck of cards will be an ace?”, and you know all outcomes are equally likely, the probability would be the number of ways one could draw an ace divided by the number of all possible outcomes. In this example, then, the probability would be 4/52. This ratio can be converted into a decimal: 4 divided by 52 is 0.077, or 7.7%. (Remember, to turn a decimal into a percent, you need to move the decimal point two places to the right.)
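The die and card examples both follow the same ratio of successful outcomes to all possible outcomes. A minimal sketch, using exact fractions so the arithmetic is easy to check (the function name `simple_probability` is a made-up label):

```python
from fractions import Fraction


def simple_probability(successful, possible):
    """Ratio of successful outcomes to all equally likely possible outcomes."""
    return Fraction(successful, possible)


p_one = simple_probability(1, 6)    # rolling a 1 on a six-sided die
p_ace = simple_probability(4, 52)   # drawing an ace from a 52-card deck

print(f"P(rolling a 1) = {p_one} = {float(p_one):.3f}")
print(f"P(ace) = {p_ace} = {float(p_ace):.3f} = {float(p_ace) * 100:.1f}%")
```

`Fraction` reduces 4/52 to 1/13 automatically; the decimal and percent forms match the 0.077 and 7.7% given in the text.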

Probability seems pretty straightforward, right? But people often misunderstand probability in real life. Take the idea of the lucky streak, for example. Let’s say someone is rolling dice and they get four 6s in a row. Lots of people might say that’s a lucky streak, and they might go as far as to say they should continue, because their luck is so good at the moment! According to the rules of probability, though, the next die roll has a 1/6 chance of being a 6, just like all the others. True, the probability of a 4-in-a-row streak occurring is fairly slim: 1/6 x 1/6 x 1/6 x 1/6 = 1/1296, or about 0.08%. But the fact is that this rare event does not predict future events (unless it is an unfair die!). Each time you roll a die, the probability of each outcome remains the same. That is what the human brain seems to have a really hard time accepting.
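Both claims about the streak can be verified by listing every possible sequence of five rolls of a fair die. This is a small enumeration sketch; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# Probability of four 6s in a row: (1/6)^4.
p_streak = Fraction(1, 6) ** 4

# Enumerate all 6^5 equally likely sequences of five rolls.
rolls = list(product(range(1, 7), repeat=5))

# Keep only the sequences that start with four 6s, then look at the fifth roll.
after_streak = [r for r in rolls if r[:4] == (6, 6, 6, 6)]
sixes_next = sum(1 for r in after_streak if r[4] == 6)
p_next_six = Fraction(sixes_next, len(after_streak))

print(f"P(four 6s in a row) = {p_streak} = {float(p_streak):.5f}")
print(f"P(next roll is a 6 | four 6s so far) = {p_next_six}")
```

The conditional probability of a fifth 6 is still 1/6, which is the point of the lucky-streak example.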


When someone makes a prediction attached to a certain probability (e.g. there is only a 1% chance of an earthquake in the next week), and then that event occurs in spite of that low probability estimate (e.g. there is actually an earthquake the day after the prediction was made)… was that person wrong? No, not really, because they allowed for the possibility. Had they said there was a 0% chance, they would have been wrong.

Probabilities are often used to express the likelihood of outcomes under conditions of uncertainty. As Bertrand Russell suggested, wise people rarely speak in terms of certainties. Because people so often misunderstand probability, or find irrational actions so hard to resist despite some understanding of probability, decision making in the sciences needs to be designed to combat our natural human tendencies. What we are discussing now in terms of how to think about and calculate probabilities will form a core component of our decision-making framework as we move forward in the course.

Now, let’s take a look at how probability is used in statistics.


We saw that percentiles are expressions of area under a normal curve. Areas under the curve can be expressed as probability, too. For example, if we say the 50th percentile for IQ is 100, that can be expressed as: “If I chose a person at random, there is a 50% chance that they will have an IQ score below 100.”

If we find that the 84th percentile for IQ is 115, another way to say that is: “If I chose a person at random, there is an 84% chance that they will have an IQ score below 115.”
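These percentile statements can be reproduced with a normal curve model. The sketch below assumes IQ scores follow a normal distribution with mean 100 and standard deviation 15, which is consistent with the two percentiles quoted above but is not stated in the text.

```python
from statistics import NormalDist

# Assumed model: IQ ~ Normal(mean 100, standard deviation 15).
iq = NormalDist(mu=100, sigma=15)

p_below_100 = iq.cdf(100)     # area under the curve below 100
p_below_115 = iq.cdf(115)     # area under the curve below 115
score_84 = iq.inv_cdf(0.84)   # score at the 84th percentile

print(f"P(IQ < 100) = {p_below_100:.2f}")
print(f"P(IQ < 115) = {p_below_115:.4f}")
print(f"84th percentile is about {score_84:.1f}")
```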


Any time you are dealing with area under the normal curve, I encourage you to express that percentage in terms of probabilities. That will help you think clearly about what that area under the curve means once we get into the exercise of making decisions based on that information.


Probabilities, of course, range from 0 to 1 as proportions or fractions, and from 0% to 100% when expressed in percentage terms. In inferential statistics, we often express in terms of probability the likelihood that we would observe a particular score under a given normal curve model.


Although I encourage you to think of probabilities as percentages, the convention in statistics is to report the probability of a score as a proportion, or decimal. The symbol used for “probability of score” is p. In statistics, the interpretation of “p” is a delicate subject. Generations of researchers have been lazy in our understanding of what “p” tells us, and we have tended to over-interpret this statistic. As we begin to work with “p”, I will ask you to memorize a mantra that will help you report its meaning accurately. For now, just keep in mind that most psychologists and psychology students still make mistakes in how they express and understand the meaning of “p” values. This will take time and effort to fix, but I am confident that your generation will learn to do better at a precise and careful understanding of what statistics like “p” tell us… and what they do not.

To give you a sense of what a statement of p < .05 might mean, let us think back to our rat weights example.

If I were to take a rat from our high-grain food group and place it on the distribution of untreated rat weights, and if it placed at Z = .9, we could look at the area under the curve from that point and above. That would tell us how likely it would be to observe such a heavy rat in the general population of nontreated rats — those that eat a normal diet.

Think of it this way. When we select a rat from our treatment group (those that ate the grain-heavy diet), and it is heavier than the average for a nontreated rat, there are two possible explanations for that observation. One is that the diet made him that way. As a scientist whose hypothesis is that a grain-heavy diet will make the rats weigh more, I’m actually motivated to interpret the observation that way. I want to believe this event is meaningful, because it is consistent with my hypothesis! But the other possibility is that, by random chance, we picked a rat that was heavy to begin with. There are plenty of rats in the distribution of nontreated rats that were at least that heavy. So there is always some probability that we just randomly selected a heavier rat. In this case, if my treated rat’s weight was less than one standard deviation above the mean, we saw in the chapter on normal curves that the probability of observing a rat weight that high or higher in the nontreated population was about 18%. That is not so unusual. It would not be terribly surprising if that outcome were simply the result of random chance rather than a result of the diet the rat had been eating.

If, on the other hand, the rat we measured was 2.5 standard deviations above the mean, the tail probability beyond that Z-score would be very small, well under 1%.

The probability of observing such a rat weight in the nontreated population is very low, so it is far less likely that observation can be accounted for just by random chance alone. As we accumulate more evidence, the probability they could have come at random from the nontreated population will weigh into our decision making about whether the grain-heavy diet indeed causes rats to become heavier. This is the way probabilities are used in the process of hypothesis testing, the logic of inferential statistics that we will look at soon.
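The two tail probabilities in the rat example can be computed directly from the standard normal curve. A minimal sketch (the helper name `upper_tail` is a made-up label):

```python
from statistics import NormalDist


def upper_tail(z):
    """Area under the standard normal curve at or above the given Z-score."""
    return 1 - NormalDist().cdf(z)


p_modest = upper_tail(0.9)    # rat at Z = 0.9
p_extreme = upper_tail(2.5)   # rat at Z = 2.5

print(f"P(Z >= 0.9) = {p_modest:.4f}")
print(f"P(Z >= 2.5) = {p_extreme:.4f}")
```

The first value is about 18%, as stated in the text; the second is well under 1%, which is why a rat that heavy is hard to explain by random selection alone.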


Now that you have seen the relevance of probability to the decision making process that comprises inferential statistics, we have one more major learning objective: to become familiar with the central limit theorem.

However, before we get to the central limit theorem, we need to be clear on the distinction between two concepts: sample and population. In the world of statistics, the population is defined as all possible individuals or scores about which we would ideally draw conclusions. When we refer to the characteristics, or parameters, that describe a population, we will use Greek letters. A sample is defined as the individuals or scores about which we are actually drawing conclusions. When we refer to the characteristics, or statistics, that describe a sample, we will use English letters.

It is important to understand the difference between a population and a sample, and how they relate to one another, in order to comprehend the central limit theorem and its usefulness for statistics. From a population we can draw multiple samples. The larger the sample, the more closely our sample will represent the population.

Think of a Venn diagram. There is a circle that is a population. Inside that large circle, you can draw an infinite number of smaller circles, each of which represents a sample.

The larger that inner circle, the more of the population it contains, and thus the more representative it is.

Let us take a concrete example. A population might be the depression screening scores for all current postsecondary students in Canada. A sample from that population might be depression screening scores for 500 randomly selected postsecondary students from several institutions across Canada. That seems a more reasonable proportion of the two million students in the population than a sample that contains only 5 students. The 500-student sample has a better shot at adequately representing the entire population than does the 5-student sample, right? You can see that intuitively… and once you learn the central limit theorem, you will see the mathematical demonstration of the importance of sample size for representing the population.

To conduct the inferential statistics we are using in this course, we will be using the normal curve model to estimate probabilities associated with particular scores. To do that, we need to assume that data are normally distributed. However, in real life, our data are almost never actually a perfect match for the normal curve.

So how can we reasonably make the normality assumption? Here’s the thing. The central limit theorem is a mathematical principle that assures us that the normality assumption is a reasonable one as long as we have a decent sample size.

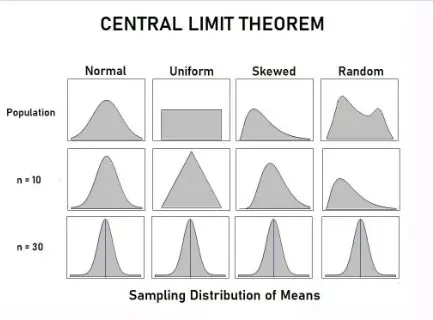

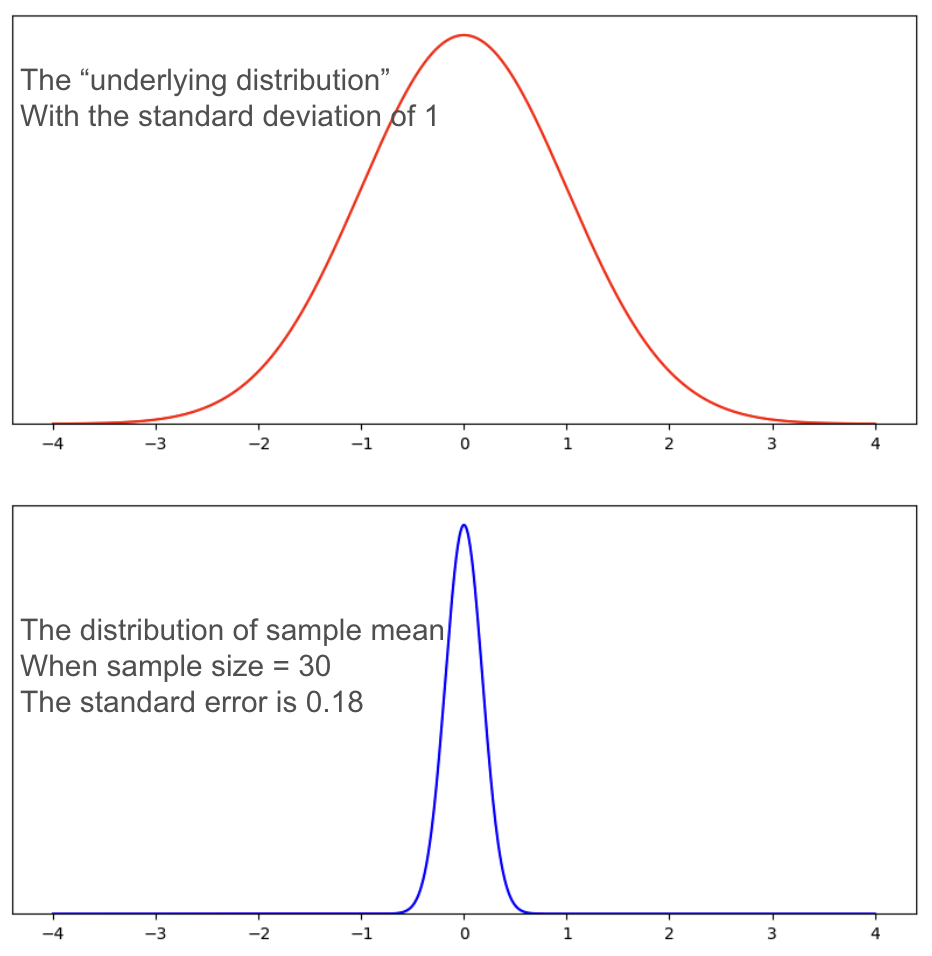

According to the theorem, if we took many samples (say, 10,000) of a large enough size (30+) and took the mean each time, the distribution of those means will approach a normal distribution, even if the scores within each sample are not normally distributed. To see this for yourself, take a look at the histograms shown here. The top histogram came from taking 10,000 samples of just one score each from a population and plotting them. See how it has a flat, or rectangular, shape? There is no way we could call that a shape approximating a normal curve. Next is a histogram that came from taking the means of 10,000 samples of 4 scores each. It looks slightly better, but still not very convincing. With a sample size of 7, it looks a bit better. Once our sample size is 10, we at least have something pretty close. As a rule of thumb, as long as the sample size is no smaller than 30, the assumption of normality is considered reasonable.

The other way we can reasonably make the normality assumption is if we know the population itself follows a normal curve. In that case, even if individual samples do not have a nicely shaped histogram, that is okay, because the normality assumption is one we apply to the population in question, not to the sample itself.

Now, you can play around with an online demonstration so you can really convince yourself that the central limit theorem works in practice. The goal here is to see what sample size is sufficient to generate a histogram that closely approximates a normal curve, and to trust that even if real-life data look wonky, the normal curve may still be a reasonable model for data analysis for purposes of inference.
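The behavior described for the histograms can also be checked with a short simulation. The sketch below assumes a flat (uniform) population on the interval from 0 to 1, draws 10,000 samples at each sample size, and reports two summaries of the sample means: their standard deviation, and the share falling within one standard deviation of their mean (about 0.68 for a normal curve, about 0.58 for a flat one). The population, the seed, and the function names are all assumptions for illustration.

```python
import random
from statistics import fmean, pstdev


def sample_means(sample_size, n_samples=10_000, seed=0):
    """Means of n_samples random samples from a uniform(0, 1) population."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [
        fmean([rng.random() for _ in range(sample_size)])
        for _ in range(n_samples)
    ]


def share_within_one_sd(values):
    """Share of values within one standard deviation of their mean."""
    m, s = fmean(values), pstdev(values)
    return sum(1 for v in values if abs(v - m) <= s) / len(values)


spread = {}
share = {}
for size in (1, 4, 7, 10, 30):
    means = sample_means(size)
    spread[size] = pstdev(means)
    share[size] = share_within_one_sd(means)
    print(f"n = {size:>2}: SD of means = {spread[size]:.4f}, "
          f"share within 1 SD = {share[size]:.3f}")
```

As the sample size grows, the spread of the sample means shrinks and the share within one standard deviation moves toward the value expected under a normal curve.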

Concept Practice: Central Limit Theorem

4b. Hypothesis Testing

We are finally ready for your first introduction to a formal decision making procedure often used in statistics, known as hypothesis testing.

In this course, we started off with descriptive statistics, so that you would become familiar with ways to summarize the important characteristics of datasets. Then we explored the concepts of standardizing scores and relating those to probability as area under the normal curve model. With all those tools, we are now ready to make something!

Okay, not furniture, exactly, but decisions.

We are now into the portion of the course that deals with inferential statistics. Just to get you thinking in terms of making decisions on the basis of data, let us take a slightly silly example. Suppose I have discovered a pill that cures hangovers!

Well, it greatly lessened symptoms of hangover in 10 of the 15 people I tested it on. I am charging 50 dollars per pill. Will you buy it the next time you go out for a night of drinking? Or recommend it to a friend? … If you said yes, I wonder if you are thinking very critically? Should we think about the cost-benefit ratio here on the basis of what information you have? If you said no, I bet some of the doubts I bring up popped to your mind as well. If 10 out of 15 people saw lessened symptoms, that’s 2/3 of people – so some people saw no benefits. Also, what does “greatly lessened symptoms of hangover” mean? Which symptoms? How much is greatly? Was the reduction by two or more standard deviations from the mean? Or was it less than one standard deviation improvement? Given the cost of 50 dollars per pill, I have to say I would be skeptical about buying it without seeing some statistics!

On this list is a preview of the basic concepts to which you will be introduced as we go through the rest of this chapter.

Hypothesis Testing Basic Concepts

- Null Hypothesis

- Research Hypothesis (alternative hypothesis)

- Statistical significance

- Conventional levels of significance

- Cutoff sample score (critical value)

- Directional vs. non-directional hypotheses

- One-tailed and two-tailed tests

- Type I and Type II errors

You can see that there are lots of new concepts to master. In my experience, each concept makes the most sense in context, within its place in the hypothesis testing workflow. We will start with defining our null and research hypotheses, then discuss the levels of statistical significance and their conventional usage. Next, we will look at how to find the cutoff sample score that will form the critical value for our decision criterion. We will look at how that differs for directional vs. non-directional hypotheses, which will lend themselves to one- or two-tailed tests, respectively.

The hypothesis testing procedure, or workflow, can be broken down into five discrete steps.

Steps of Hypothesis Testing

- Restate question as a research hypothesis and a null hypothesis about populations.

- Determine characteristics of the comparison distribution.

- Determine the cutoff sample score on the comparison distribution at which the null hypothesis should be rejected.

- Determine your sample’s score on the comparison distribution.

- Decide whether to reject the null hypothesis.

These steps are something we will be using pretty much the rest of the semester, so it is worth memorizing them now. My favourite approach to that is to create a mnemonic device. I recommend the following key words from which to form your mnemonic device: hypothesis, characteristics, cutoff, score, and decide. Not very memorable? Try associating those with more memorable words that start with the same letter or sound. How about “Happy Chickens Cure Sad Days.” Or you can put the words into a mnemonic device generator on the internet and get something truly bizarre. I just tried one and got “Hairless Carsick Chewbacca Slapped Demons”. Another good one: “Hamlet Chose Cranky Sushi Drunkenly.” Anyway, you can play around with it or brainstorm until you hit upon one that works for you. Who knew statistics could be this much fun!

The first step in hypothesis testing is always to formulate hypotheses. The first rule that will help you do so correctly is that hypotheses are always about populations. We study samples in order to make conclusions about populations, so our predictions should be about the populations themselves. First, we define population 1 and population 2. Population 1 is always defined as people like the ones in our research study, the ones we are truly interested in. Population 2 is the comparison population, the status quo to which we are looking to compare our research population. Now, remember, when referring to populations, we always use Greek letters. So if we formulate our hypotheses in symbols, we need to use Greek letters.

It is a good idea to state our hypotheses both in symbols and in words. We need to make them specific and disprovable. If you follow my tips, you will have it down with just a little practice.

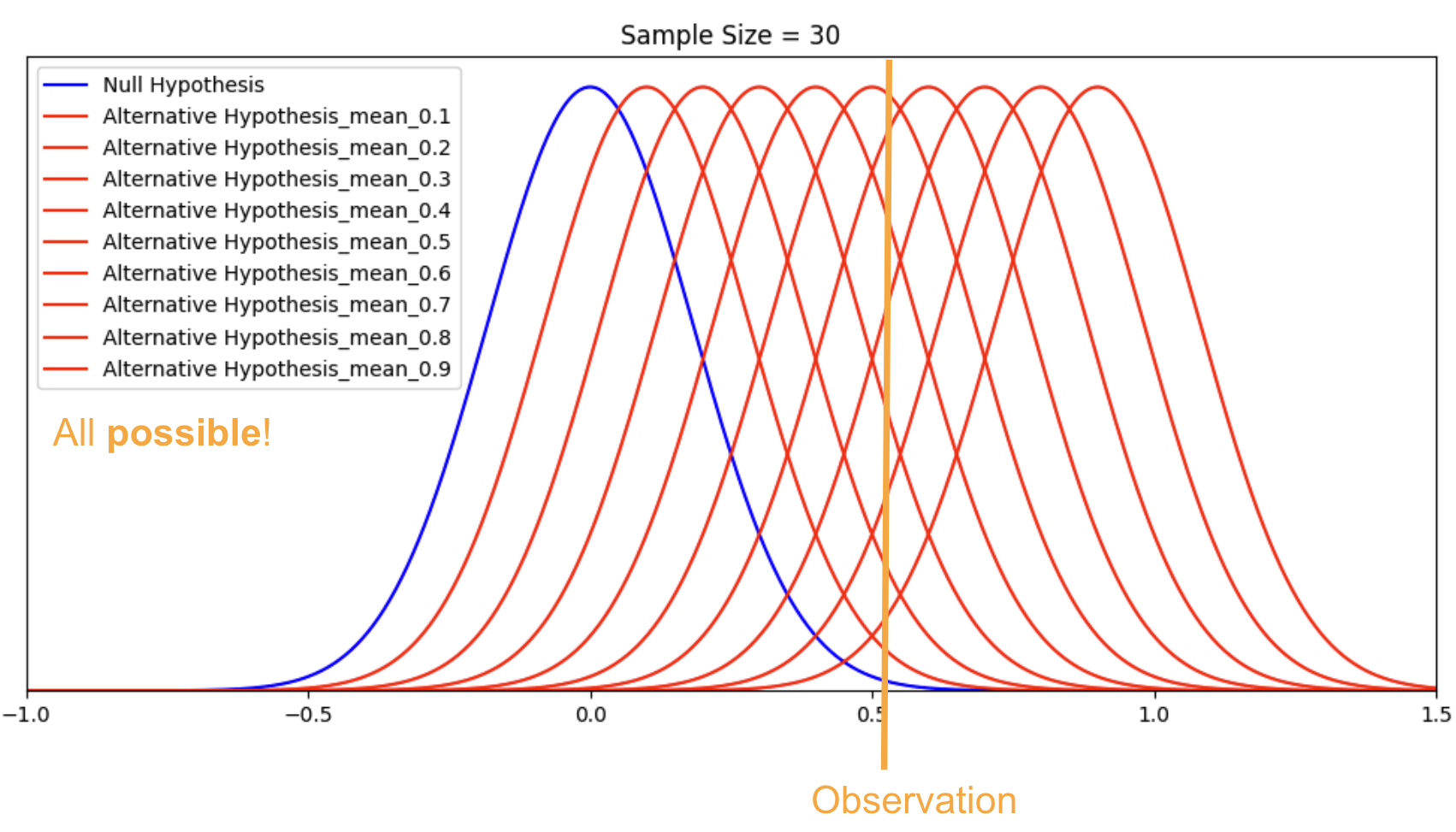

We need to state two hypotheses. First, we state the research hypothesis, which is sometimes referred to as the alternative hypothesis. The research hypothesis is a statement of inequality, or that something happened! This hypothesis makes the prediction that the population from which the research sample came is different from the comparison population. In other words, it predicts that the sample comes from a different distribution than the comparison one.

The null hypothesis, on the other hand, is a statement of equality, or that nothing happened. This hypothesis makes the prediction that the population from which the sample came is not different from the comparison population. We set up the null hypothesis as a so-called straw man that we hope to tear down. Just remember, null means nothing: nothing is different between the populations.

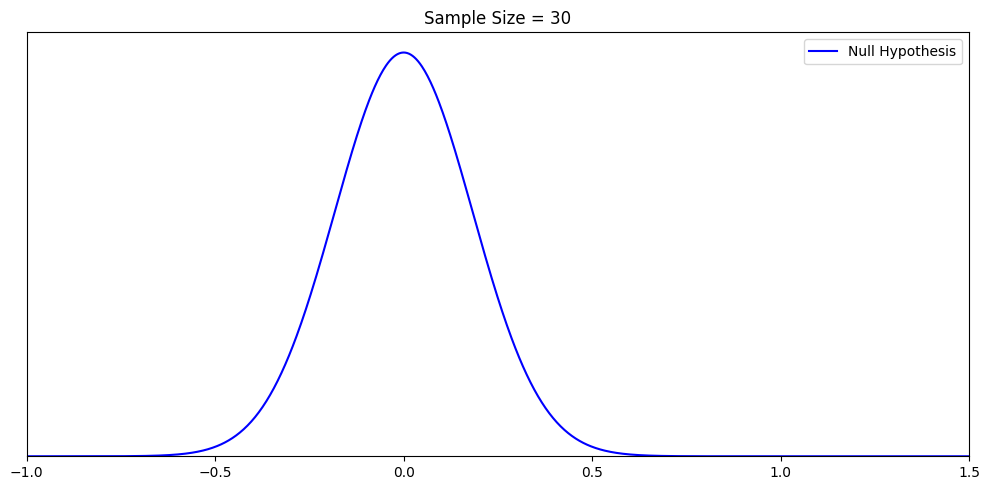

Step two of hypothesis testing is to determine the characteristics of the comparison distribution. This is where our descriptive statistics, the mean and standard deviation, come in. We need to ensure our normal curve model to which we are comparing our research sample is mapped out according to the particular characteristics of the population of comparison, which is population 2.

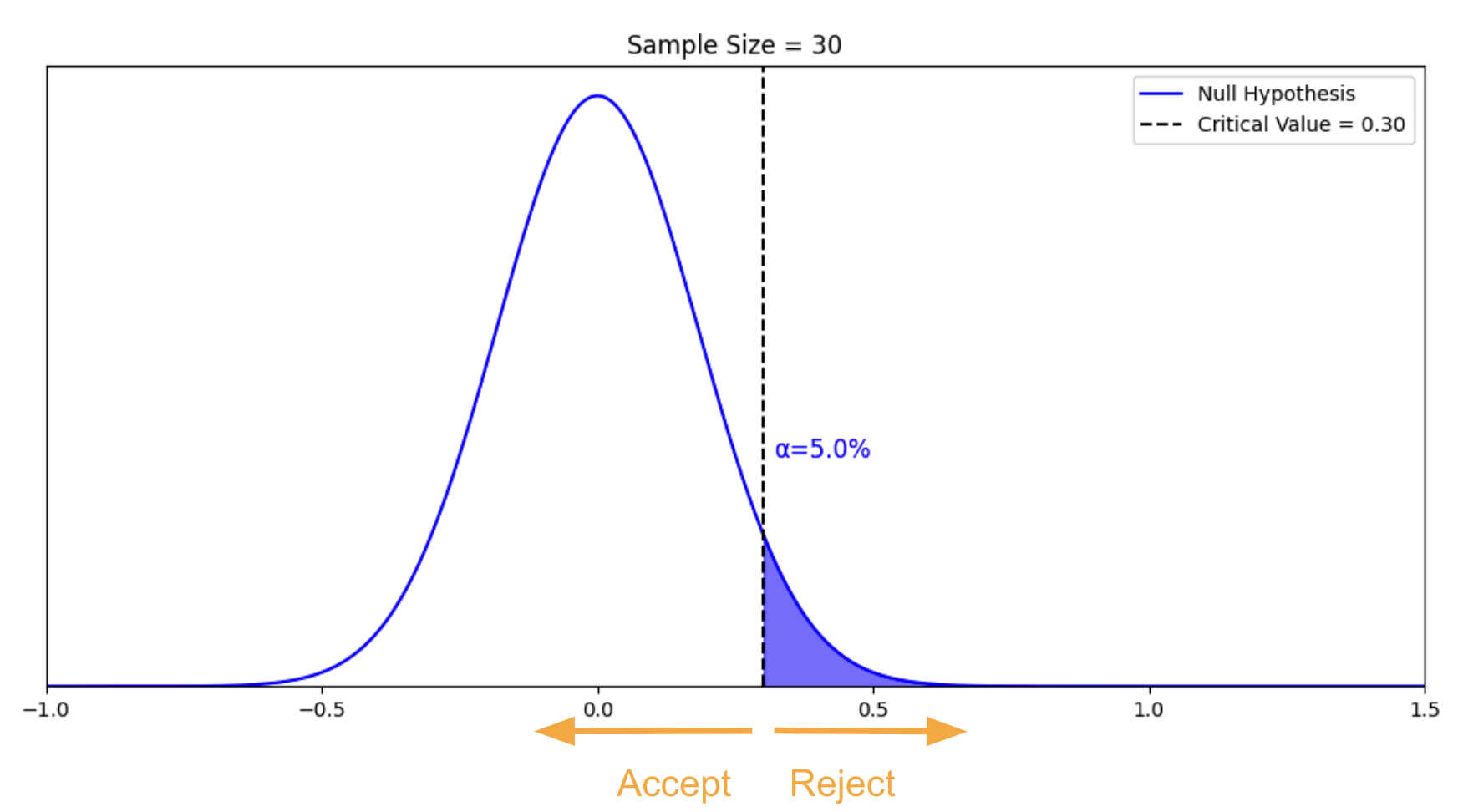

Next it is time to set our decision rule. Step 3 is to determine the cutoff sample score, which is derived from two pieces of information. The first is the conventional significance level that applies. By convention, the probability level that we are willing to accept as a risk that the score from our research sample might occur by random chance within the comparison distribution is set to one of three levels: 10%, 5%, or 1%. The most common choice of significance level is 5%. Typically the significance level will be provided to you in the problem for your statistics courses, but if it is not, just default to a significance level of .05. Sometimes researchers will choose a more conservative significance level, like 1%, if they are particularly risk averse. If the researcher chooses a 10% significance level, they are likely conducting a more exploratory study, perhaps a pilot study, and are not too worried about the probability that the score might be fairly common under the comparison distribution.

The second piece of information we need to know in order to find our cutoff sample score is which tail we are looking at. Is this a directional hypothesis, and thus a one-tailed test? Or a non-directional hypothesis, and thus a two-tailed test? This depends on the research hypothesis from step 1. Look for directional keywords in the problem. If the researcher's prediction involves words like “greater than” or “larger than”, this signals that we should be doing a one-tailed test and that our cutoff sample score should be in the top tail of the distribution. If the researcher's prediction involves words like “lower than” or “smaller than”, this signals that we should be doing a one-tailed test and that our cutoff sample score should be in the bottom tail of the distribution. If the prediction is neutral in directionality, and uses a word like “different”, that signals a non-directional hypothesis. In that case, we would need to use a two-tailed test, and our cutoff scores would need to be indicated on both tails of the distribution. To do that, we take our area under the curve, which matches our significance level, and split it evenly between the two tails.

For example, if we have a two-tailed test with a .05 significance level, then we would split the 5% area under the curve into the two tails, so two and a half percent in each tail.

Concept Practice: deciding on one-tailed vs. two-tailed tests

We can find the Z-score that forms the border of the tail area we have identified based on significance level and directionality by looking it up in a table or an online calculator. I always recommend mapping this cutoff score onto a drawing of the comparison distribution as shown above. This should help you visualize the setup of the hypothesis test clearly and accurately.
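As an alternative to a printed table, the cutoff can be computed directly. This is a minimal sketch using Python's standard-library `statistics.NormalDist`; the helper name `cutoff_z` and its `tail` labels are hypothetical, chosen only for this illustration.

```python
from statistics import NormalDist

def cutoff_z(alpha, tail):
    """Return the cutoff Z-score(s) for a significance level and tail choice.

    tail: "upper" or "lower" for a one-tailed test, "two" for a two-tailed test.
    """
    std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1
    if tail == "upper":
        return (std_normal.inv_cdf(1 - alpha),)
    if tail == "lower":
        return (std_normal.inv_cdf(alpha),)
    if tail == "two":
        half = alpha / 2  # split the tail area evenly between both tails
        return (std_normal.inv_cdf(half), std_normal.inv_cdf(1 - half))
    raise ValueError("tail must be 'upper', 'lower', or 'two'")

# Cutoffs for the three conventional significance levels.
for alpha in (0.10, 0.05, 0.01):
    one_tailed = [round(c, 3) for c in cutoff_z(alpha, "upper")]
    two_tailed = [round(c, 3) for c in cutoff_z(alpha, "two")]
    print(f"alpha={alpha}: one-tailed (upper) {one_tailed}, two-tailed {two_tailed}")
```

For the most common case, a .05 significance level, this gives a cutoff of about 1.645 for an upper one-tailed test and about ±1.96 for a two-tailed test, because the two-tailed test leaves only 2.5% in each tail.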

Concept Practice: inference through hypothesis testing

The next step in the hypothesis testing procedure is to determine your sample’s score on the comparison distribution. To do this, we calculate a test statistic from the sample raw score, mark it on the comparison distribution, and determine whether it falls in the shaded tail or not. In reality, we would always have a sample with more than one score in it. However, for the sake of keeping our test statistic formula a familiar one, we will use a sample size of one. We will use our Z-score formula to translate the sample’s raw score into a Z-score – in other words, we will figure out how many standard deviations above or below the comparison distribution’s mean the sample score is.

Finally, it’s time to decide whether to reject the null hypothesis. This decision is based on whether our sample’s data point was more extreme than the cutoff score; in other words, “did it fall in the shaded tail?” If the sample score is more extreme than the cutoff score, then we must reject the null hypothesis. Our research hypothesis is supported! (Not proven… remember, there is still some probability that that score could have occurred randomly within the comparison distribution.) But it is sound to say that it appears quite likely that the population from which our sample came is different from the comparison population. Another way to express this decision is to say that the result was statistically significant, which is to say that there is less than a 5% chance of this result occurring randomly within the comparison distribution (here I just filled in the blank with the significance level).

What if the research sample score did not fall in the shaded tail? In the case that the sample score is less extreme than the cutoff score, then our research hypothesis is not supported. We do not reject the null hypothesis. We do not have enough evidence to say that the population from which our sample came is different from the comparison population. Note that we do not typically express this result as “accept the null hypothesis” or “we have proved the null hypothesis”. From this test, we do not have evidence that the null hypothesis is correct; rather, we simply did not have enough evidence to reject it. Another way to express this decision is to say that the result was not statistically significant, which is to say that there is more than a 5% chance of this result occurring randomly within the comparison distribution (here I just used the most common significance level).
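Steps 3 to 5 can be put together in one short function. This is a sketch under stated assumptions: a sample of one score, a normal comparison distribution with known mean and standard deviation, and made-up sleep numbers that are not taken from the text. The function name `z_test_single_score` is hypothetical.

```python
from statistics import NormalDist

def z_test_single_score(raw_score, pop_mean, pop_sd, alpha=0.05, tail="two"):
    """Return (z, cutoff, reject) for one score compared against a normal comparison distribution."""
    std_normal = NormalDist()
    # Step 4: the sample's score on the comparison distribution, as a Z-score.
    z = (raw_score - pop_mean) / pop_sd
    # Step 3: the cutoff depends on the significance level and on the tail(s).
    if tail == "upper":
        cutoff = std_normal.inv_cdf(1 - alpha)
        reject = z > cutoff
    elif tail == "lower":
        cutoff = std_normal.inv_cdf(alpha)
        reject = z < cutoff
    elif tail == "two":
        cutoff = std_normal.inv_cdf(1 - alpha / 2)  # compared against |z|
        reject = abs(z) > cutoff
    else:
        raise ValueError("tail must be 'upper', 'lower', or 'two'")
    # Step 5: reject the null hypothesis only if the score is more extreme than the cutoff.
    return z, cutoff, reject

# Hypothetical numbers: the comparison population sleeps 7.0 hours on average (SD 1.0),
# and one person taking a new medication sleeps 9.1 hours. Directional prediction: "longer".
z, cutoff, reject = z_test_single_score(9.1, 7.0, 1.0, alpha=0.05, tail="upper")
print(f"Z = {z:.2f}, cutoff = {cutoff:.3f}, reject null: {reject}")
```

With these made-up numbers the Z-score of 2.10 lies beyond the cutoff of about 1.645, so the null hypothesis would be rejected. A score of 8.0 hours (Z = 1.00) would not fall in the shaded tail, and the null hypothesis would not be rejected.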

Concept Practice: interpreting conclusions of hypothesis tests

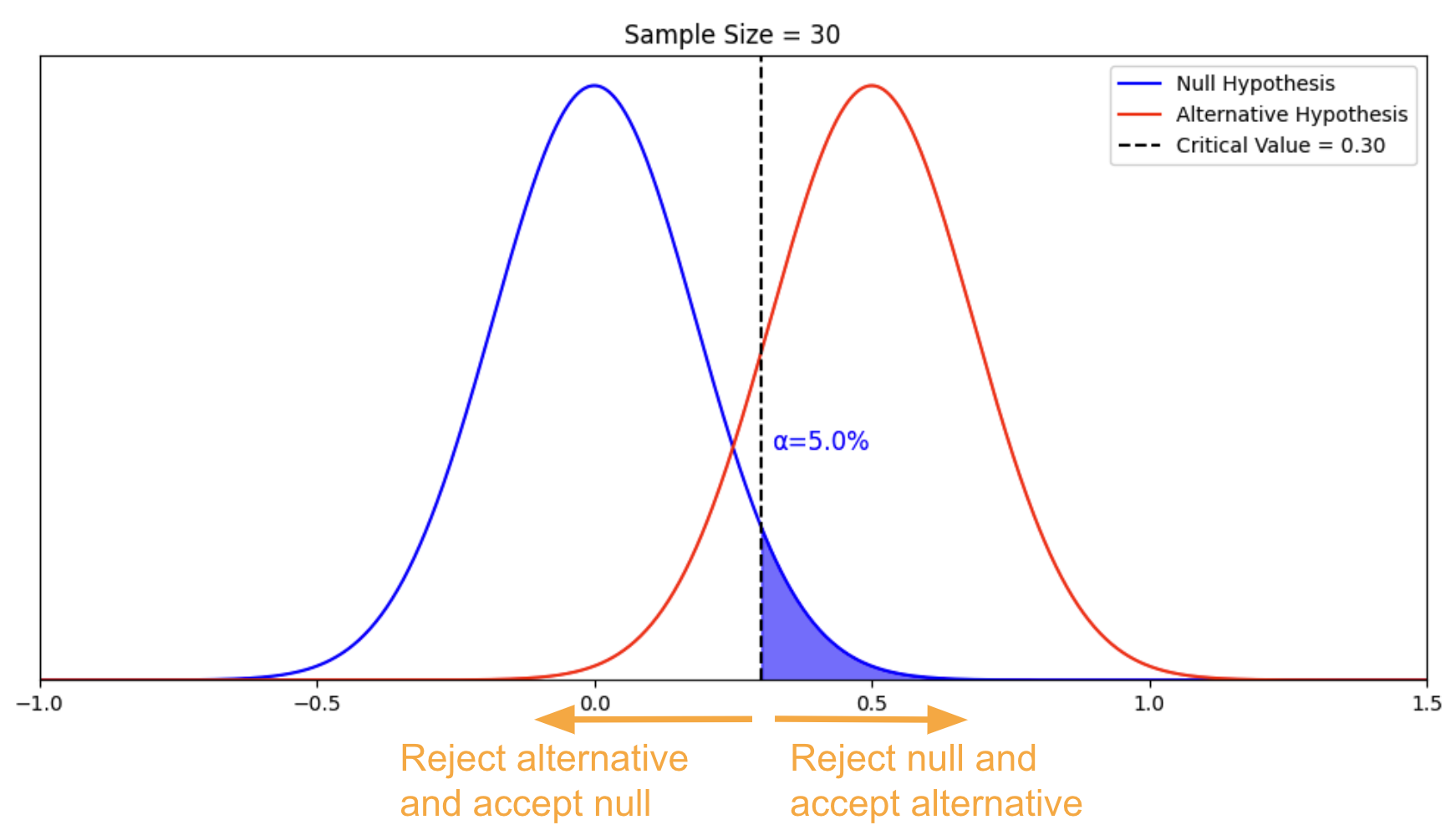

So we have described the hypothesis testing process from beginning to end. The whole process of null hypothesis testing can feel like pretty tortured logic at first. So let us zoom out, and look at the whole process another way. Essentially what we are seeking to do in such a hypothesis test is to compare two populations. We want to find out if the populations are distinct enough to confidently state that there is a difference between population 1 and population 2. In our example, we wanted to know if the population of people using a new medication, population 1, sleep longer than the population of people who are not using that new medication, population 2. We ended up finding that the research evidence to hand suggests population 1’s distribution is distinct enough from population 2 that we could reject the null hypothesis of similarity.

In other words, we were able to conclude that the difference between the centres of the two distributions was statistically significant.

If, on the other hand, the distributions were a bit less distinct, we would not have been able to make that claim of a significant difference.

We would not have rejected the null hypothesis if evidence indicated the populations were too similar.

Just how different do the two distributions need to be? That criterion is set by the cutoff score, which depends on the significance level, and whether it is a one-tailed or two-tailed hypothesis test.

Concept Practice: Putting hypothesis test elements together

That was a lot of new concepts to take on! As a reward, assuming you enjoy memes, there are a plethora of statistics memes, some of which you may find funny now that you have made it into inferential statistics territory. Welcome to the exclusive club of people who have this rather peculiar sense of humour. Enjoy!

Chapter Summary

In this chapter we examined probability and how it can be used to make inferences about data in the framework of hypothesis testing. We now have a sense of how two populations can be compared and the difference between their means evaluated for statistical significance.


probability: in a situation where several different outcomes are possible, the probability of any specific outcome is a fraction or proportion of all possible outcomes

central limit theorem: mathematical theorem that proposes the following: if we took many samples (10,000) of large enough size (30+) and took the mean each time, the distribution of those means will approach a normal distribution, even if the scores from each sample are not normally distributed

population: all possible individuals or scores about which we would ideally draw conclusions

hypothesis testing: a formal decision making procedure often used in inferential statistics

sample: the individuals or scores about which we are actually drawing conclusions

significance level: the probability level that we are willing to accept as a risk that the score from our research sample might occur by random chance within the comparison distribution. By convention, it is set to one of three levels: 10%, 5%, or 1%.

cutoff sample score: critical value that serves as a decision criterion in hypothesis testing

research hypothesis: prediction that the population from which the research sample came is different from the comparison population

null hypothesis: the prediction that the population from which the sample came is not different from the comparison population

directional hypothesis: a research prediction that the research population mean will be “greater than” or “less than” the comparison population mean

one-tailed test: a hypothesis test in which there is only one cutoff sample score on either the lower or the upper end of the comparison distribution

non-directional hypothesis: a research prediction that the research population mean will be “different from” the comparison population mean, but allows for the possibility that the research population mean may be either greater than or less than the comparison population mean

two-tailed test: a hypothesis test in which there are two cutoff sample scores, one on either end of the comparison distribution

reject the null hypothesis: a decision in hypothesis testing that concludes statistical significance because the sample score is more extreme than the cutoff score

statistically significant: the conclusion from a hypothesis test that the probability of the observed result occurring randomly within the comparison distribution is less than the significance level

do not reject the null hypothesis: a decision in hypothesis testing that is inconclusive because the sample score is less extreme than the cutoff score

Beginner Statistics for Psychology Copyright © 2021 by Nicole Vittoz is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License , except where otherwise noted.


Statistics By Jim

Making statistics intuitive

Normal Distribution in Statistics

By Jim Frost

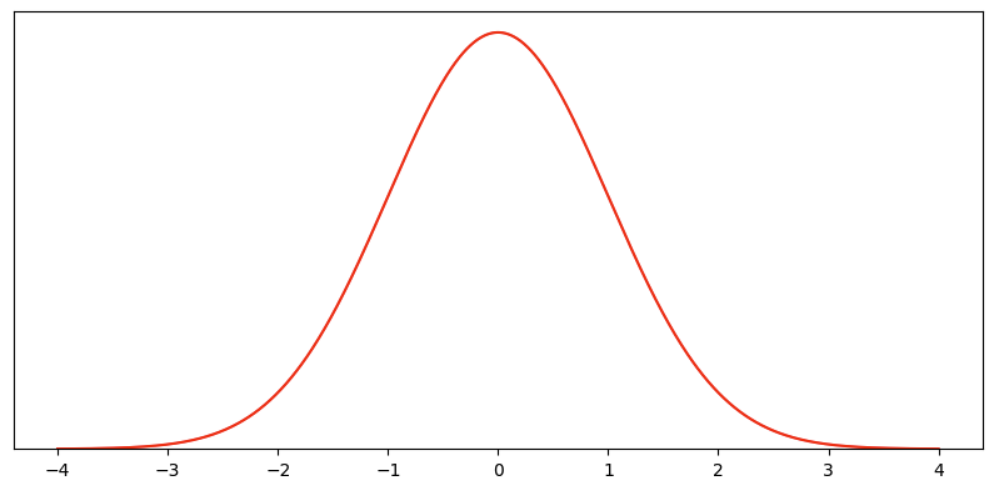

The normal distribution, also known as the Gaussian distribution, is the most important probability distribution in statistics for independent, random variables. Most people recognize its familiar bell-shaped curve in statistical reports.

The normal distribution is a continuous probability distribution that is symmetrical around its mean. Most of the observations cluster around the central peak, and the probabilities for values further away from the mean taper off equally in both directions. Extreme values in both tails of the distribution are similarly unlikely. While the normal distribution is symmetrical, not all symmetrical distributions are normal. For example, the Student’s t, Cauchy, and logistic distributions are symmetric.

As with any probability distribution, the normal distribution describes how the values of a variable are distributed. It is the most important probability distribution in statistics because it accurately describes the distribution of values for many natural phenomena. Characteristics that are the sum of many independent processes frequently follow normal distributions. For example, heights, blood pressure, measurement error, and IQ scores follow the normal distribution.

In this blog post, learn how to use the normal distribution, about its parameters, the Empirical Rule, and how to calculate Z-scores to standardize your data and find probabilities.

Example of Normally Distributed Data: Heights

Height data are normally distributed. The distribution in this example fits real data that I collected from 14-year-old girls during a study. The graph below displays the probability density function for this normal distribution. Learn more about Probability Density Functions.

As you can see, the distribution of heights follows the typical bell curve pattern for all normal distributions. Most girls are close to the average (1.512 meters). Small differences between an individual’s height and the mean occur more frequently than substantial deviations from the mean. The standard deviation is 0.0741m, which indicates the typical distance that individual girls tend to fall from mean height.

The distribution is symmetric. The number of girls shorter than average equals the number of girls taller than average. In both tails of the distribution, extremely short girls occur as infrequently as extremely tall girls.
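The symmetry described here can be checked numerically. This is a small sketch using `statistics.NormalDist` with the mean (1.512 m) and standard deviation (0.0741 m) quoted above; the 0.15 m distance used for the tails is an arbitrary choice for illustration.

```python
from statistics import NormalDist

# Normal model for the heights example: mean 1.512 m, standard deviation 0.0741 m.
heights = NormalDist(mu=1.512, sigma=0.0741)

below_mean = heights.cdf(1.512)             # proportion shorter than average
short_tail = heights.cdf(1.512 - 0.15)      # proportion shorter than 1.362 m
tall_tail = 1 - heights.cdf(1.512 + 0.15)   # proportion taller than 1.662 m

print(f"shorter than the mean: {below_mean:.3f}")
print(f"more than 0.15 m below the mean: {short_tail:.4f}")
print(f"more than 0.15 m above the mean: {tall_tail:.4f}")
```

Half of the model's area lies below the mean, and the two tail areas match, which is what symmetry means in terms of probability.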

Parameters of the Normal Distribution

As with any probability distribution, the parameters for the normal distribution define its shape and probabilities entirely. The normal distribution has two parameters, the mean and standard deviation. The Gaussian distribution does not have just one form. Instead, the shape changes based on the parameter values, as shown in the graphs below.

Mean μ

The mean is the central tendency of the normal distribution. It defines the location of the peak for the bell curve. Most values cluster around the mean. On a graph, changing the mean shifts the entire curve left or right on the X-axis. Statisticians denote the population mean using μ (mu).

μ is the expected value of the normal distribution. Learn more about Expected Values: Definition, Using & Example .

Related posts : Measures of Central Tendency and What is the Mean?

Standard deviation σ

The standard deviation is a measure of variability. It defines the width of the normal distribution. The standard deviation determines how far away from the mean the values tend to fall. It represents the typical distance between the observations and the average. Statisticians denote the population standard deviation using σ (sigma).

On a graph, changing the standard deviation either tightens or spreads out the width of the distribution along the X-axis. Larger standard deviations produce wider distributions.

When you have narrow distributions, the probabilities are higher that values won’t fall far from the mean. As you increase the spread of the bell curve, the likelihood that observations will be further away from the mean also increases.

Related post : Measures of Variability and Standard Deviation

Population parameters versus sample estimates

The mean and standard deviation are parameter values that apply to entire populations. For the Gaussian distribution, statisticians signify the parameters by using the Greek symbol μ (mu) for the population mean and σ (sigma) for the population standard deviation.

Unfortunately, population parameters are usually unknown because it’s generally impossible to measure an entire population. However, you can use random samples to calculate estimates of these parameters. Statisticians represent sample estimates of these parameters using x̅ for the sample mean and s for the sample standard deviation.
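To make the distinction concrete, the sketch below pretends the population parameters are known (borrowing μ = 1.512 and σ = 0.0741 from the heights example), draws a random sample, and computes the sample estimates x̅ and s. The sample size of 200 and the seed are arbitrary assumptions.

```python
import random
import statistics

rng = random.Random(42)
MU, SIGMA = 1.512, 0.0741  # population parameters, borrowed from the heights example

# In practice we would only ever see the sample, not MU and SIGMA.
sample = [rng.gauss(MU, SIGMA) for _ in range(200)]

x_bar = statistics.fmean(sample)  # sample mean, an estimate of mu
s = statistics.stdev(sample)      # sample standard deviation (n - 1 denominator), an estimate of sigma

print(f"x-bar = {x_bar:.4f} (mu = {MU})")
print(f"s     = {s:.4f} (sigma = {SIGMA})")
```

The estimates should land close to the parameters without matching them exactly; a different random sample would give slightly different values.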

Learn more about Parameters vs Statistics: Examples & Differences .

Common Properties for All Forms of the Normal Distribution

Despite the different shapes, all forms of the normal distribution have the following characteristic properties.

- They’re all unimodal , symmetric bell curves. The Gaussian distribution cannot model skewed distributions.

- The mean, median, and mode are all equal.

- Half of the population is less than the mean and half is greater than the mean.

- The Empirical Rule allows you to determine the proportion of values that fall within certain distances from the mean. More on this below!

While the normal distribution is essential in statistics, it is just one of many probability distributions, and it does not fit all populations. To learn how to determine whether the normal distribution provides the best fit to your sample data, read my posts about How to Identify the Distribution of Your Data and Assessing Normality: Histograms vs. Normal Probability Plots .

The uniform distribution also models symmetric, continuous data, but all equal-sized ranges in this distribution have the same probability, which differs from the normal distribution.

If you have continuous data that are skewed, you’ll need to use a different distribution, such as the Weibull , lognormal , exponential , or gamma distribution.

Related post : Skewed Distributions

The Empirical Rule for the Normal Distribution

When you have normally distributed data, the standard deviation becomes particularly valuable. You can use it to determine the proportion of the values that fall within a specified number of standard deviations from the mean. For example, in a normal distribution, 68% of the observations fall within +/- 1 standard deviation from the mean. This property is part of the Empirical Rule, which describes the percentage of the data that fall within specific numbers of standard deviations from the mean for bell-shaped curves.

| Mean +/- standard deviations | Percentage of data contained |
| --- | --- |
| 1 | 68% |
| 2 | 95% |
| 3 | 99.7% |

Let’s look at a pizza delivery example. Assume that a pizza restaurant has a mean delivery time of 30 minutes and a standard deviation of 5 minutes. Using the Empirical Rule, we can determine that 68% of the delivery times are between 25-35 minutes (30 +/- 5), 95% are between 20-40 minutes (30 +/- 2*5), and 99.7% are between 15-45 minutes (30 +/-3*5). The chart below illustrates this property graphically.
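The Empirical Rule percentages are rounded areas under the standard normal curve, which can be verified with a short sketch. It uses `statistics.NormalDist` and the pizza delivery figures from the example; the helper name `share_within` is made up for this illustration.

```python
from statistics import NormalDist

std_normal = NormalDist()      # standard normal: mean 0, standard deviation 1
mean_time, sd_time = 30, 5     # pizza delivery example (minutes)

def share_within(k):
    """Proportion of a normal distribution within k standard deviations of the mean."""
    return std_normal.cdf(k) - std_normal.cdf(-k)

for k in (1, 2, 3):
    low = mean_time - k * sd_time
    high = mean_time + k * sd_time
    print(f"within {k} SD ({low}-{high} minutes): {share_within(k):.1%}")
```

The computed shares are about 68.3%, 95.4%, and 99.7%, which the Empirical Rule rounds to 68%, 95%, and 99.7%.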

If your data do not follow the Gaussian distribution and you want an easy method to determine proportions for various standard deviations, use Chebyshev’s Theorem ! That method provides a similar type of result as the Empirical Rule but for non-normal data.

To learn more about this rule, read my post, Empirical Rule: Definition, Formula, and Uses .

Standard Normal Distribution and Standard Scores

As we’ve seen above, the normal distribution has many different shapes depending on the parameter values. However, the standard normal distribution is a special case of the normal distribution where the mean is zero and the standard deviation is 1. This distribution is also known as the Z-distribution.

A value on the standard normal distribution is known as a standard score or a Z-score. A standard score represents the number of standard deviations above or below the mean that a specific observation falls. For example, a standard score of 1.5 indicates that the observation is 1.5 standard deviations above the mean. On the other hand, a negative score represents a value below the average. The mean has a Z-score of 0.

Suppose you weigh an apple and it weighs 110 grams. There’s no way to tell from the weight alone how this apple compares to other apples. However, as you’ll see, after you calculate its Z-score, you know where it falls relative to other apples.

Learn how the Z Test uses Z-scores and the standard normal distribution to determine statistical significance.

Standardization: How to Calculate Z-scores

Standard scores are a great way to understand where a specific observation falls relative to the entire normal distribution. They also allow you to take observations drawn from normally distributed populations that have different means and standard deviations and place them on a standard scale. This standard scale enables you to compare observations that would otherwise be difficult.

This process is called standardization, and it allows you to compare observations and calculate probabilities across different populations. In other words, it permits you to compare apples to oranges. Isn’t statistics great!

To standardize your data, you need to convert the raw measurements into Z-scores.

To calculate the standard score for an observation, take the raw measurement, subtract the mean, and divide by the standard deviation. Mathematically, the formula for that process is the following:

$$Z = \frac{X - \mu}{\sigma}$$

X represents the raw value of the measurement of interest. Mu (μ) and sigma (σ) represent the parameters for the population from which the observation was drawn.

After you standardize your data, you can place them within the standard normal distribution. In this manner, standardization allows you to compare different types of observations based on where each observation falls within its own distribution.

Example of Using Standard Scores to Make an Apples to Oranges Comparison

Suppose we literally want to compare apples to oranges. Specifically, let’s compare their weights. Imagine that we have an apple that weighs 110 grams and an orange that weighs 100 grams.

If we compare the raw values, it’s easy to see that the apple weighs more than the orange. However, let’s compare their standard scores. To do this, we’ll need to know the properties of the weight distributions for apples and oranges. Assume that the weights of apples and oranges follow a normal distribution with the following parameter values:

| | Apples | Oranges |
| --- | --- | --- |
| Mean weight (grams) | 100 | 140 |
| Standard deviation (grams) | 15 | 25 |

Now we’ll calculate the Z-scores:

- Apple = (110-100) / 15 = 0.667

- Orange = (100-140) / 25 = -1.6

The Z-score for the apple (0.667) is positive, which means that our apple weighs more than the average apple. It’s not an extreme value by any means, but it is above average for apples. On the other hand, the orange has a fairly negative Z-score (-1.6). It’s pretty far below the mean weight for oranges. I’ve placed these Z-values in the standard normal distribution below.

While our apple weighs more than our orange, we are comparing a somewhat heavier than average apple to a downright puny orange! Using Z-scores, we’ve learned how each fruit fits within its own bell curve and how they compare to each other.
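The same comparison takes only a few lines of code. This sketch applies the standardization formula to the apple and orange figures given above; the function name `z_score` is an illustrative choice.

```python
def z_score(x, mu, sigma):
    """Standardize a raw value: how many standard deviations it lies from the mean."""
    return (x - mu) / sigma

apple_z = z_score(110, mu=100, sigma=15)   # 110 g apple, apples: mean 100 g, SD 15 g
orange_z = z_score(100, mu=140, sigma=25)  # 100 g orange, oranges: mean 140 g, SD 25 g

print(f"apple Z = {apple_z:.3f}, orange Z = {orange_z:.1f}")
# prints: apple Z = 0.667, orange Z = -1.6
```

Because both values are now on the same standard scale, the signs and sizes of the two Z-scores can be compared directly, even though the raw weights come from different distributions.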

For more detail about z-scores, read my post, Z-score: Definition, Formula, and Uses

Finding Areas Under the Curve of a Normal Distribution

The normal distribution is a probability distribution. As with any probability distribution, the proportion of the area that falls under the curve between two points on a probability distribution plot indicates the probability that a value will fall within that interval. To learn more about this property, read my post about Understanding Probability Distributions .

Typically, I use statistical software to find areas under the curve. However, when you’re working with the normal distribution and convert values to standard scores, you can calculate areas by looking up Z-scores in a Standard Normal Distribution Table.

Because there are an infinite number of different Gaussian distributions, publishers can’t print a table for each distribution. However, you can transform the values from any normal distribution into Z-scores, and then use a table of standard scores to calculate probabilities.

Using a Table of Z-scores

Let’s take the Z-score for our apple (0.667) and use it to determine its weight percentile. A percentile is the proportion of a population that falls below a specific value. Consequently, to determine the percentile, we need to find the area that corresponds to the range of Z-scores that are less than 0.667. In the portion of the table below, the closest Z-score to ours is 0.65, which we’ll use.

Click here for a full Z-table and illustrated instructions for using it !

The trick with these tables is to use the values in conjunction with the properties of the bell curve to calculate the probability that you need. The table value indicates that the area of the curve between -0.65 and +0.65 is 48.43%. However, that’s not what we want to know. We want the area that is less than a Z-score of 0.65.

We know that the two halves of the normal distribution are mirror images of each other. So, if the area for the interval from -0.65 to +0.65 is 48.43%, then the range from 0 to +0.65 must be half of that: 48.43/2 = 24.215%. Additionally, we know that the area for all scores less than zero is half (50%) of the distribution.

Therefore, the area for all scores up to 0.65 = 50% + 24.215% = 74.215%

Our apple is at approximately the 74th percentile.

Below is a probability distribution plot produced by statistical software that shows the same percentile along with a graphical representation of the corresponding area under the bell curve. The value is slightly different because we used a Z-score of 0.65 from the table while the software uses the more precise value of 0.667.
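The table lookup and the software result can both be reproduced with the standard normal cumulative distribution function. This is a minimal sketch using `statistics.NormalDist`; it is not the software that produced the plot, so treat the exact digits as illustrative.

```python
from statistics import NormalDist

std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1

# Area below the rounded table value of 0.65.
table_percentile = std_normal.cdf(0.65)

# Area below the apple's more precise Z-score, (110 - 100) / 15.
precise_percentile = std_normal.cdf((110 - 100) / 15)

print(f"using Z = 0.65:  {table_percentile:.2%}")
print(f"using Z = 0.667: {precise_percentile:.2%}")
```

The first value agrees with the 74.215% worked out from the table, and the second is slightly higher because the Z-score is not rounded down to 0.65. Either way, the apple sits at roughly the 74th to 75th percentile.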

Related post : Percentiles: Interpretations and Calculations

Other Reasons Why the Normal Distribution is Important

In addition to all of the above, there are several other reasons why the normal distribution is crucial in statistics.

- Some statistical hypothesis tests assume that the data follow a bell curve. However, as I explain in my post about parametric and nonparametric tests , there’s more to it than only whether the data are normally distributed.

- Linear and nonlinear regression both assume that the residuals follow a Gaussian distribution. Learn more in my post about assessing residual plots .

- The central limit theorem states that as the sample size increases, the sampling distribution of the mean approaches a normal distribution even when the underlying distribution of the original variable is non-normal.

That was quite a bit about the bell curve! Hopefully, you can understand that it is crucial because of the many ways that analysts use it.

If you’re learning about statistics and like the approach I use in my blog, check out my Introduction to Statistics book! It’s available at Amazon and other retailers.

Reader Interactions

January 17, 2023 at 8:55 am

Thanks, Jim, for the detailed response. Much appreciated. It makes sense.

January 16, 2023 at 7:20 pm

Hi Jim, well, one caveat to your caveat. 🙂 I am assuming that even though we know the general mean is 100, we do NOT know if there is something inherent about the two schools whereby their mean might not represent the general population. In fact, I made it extreme to show that their respective means are probably NOT 100. So, for the school with an IQ of 60, maybe it is 100, maybe it is 80, maybe it is 60, maybe it is 50, etc. But it seems to me that we could do a probability distribution around each of those in some way (i.e., if their real mean was 100, what is the sampling distribution; if the real mean is 80, what is the sampling distribution; etc.). So, I guess ultimately, I am asking two things. 1) What is the real mean for the school with a mean of 60 (in the case of the lower-scoring school, intuition tells me it must be higher)? 2) Perhaps the real crux of my question: how would we go about estimating those respective means? To me, this has Bayesian written all over it (the prior is 100, the updated info is 60, etc.). But I only know Bayes with probabilities. Anyway, I think this is an important question with Bayesian thinking needed, and I don’t think this subject gets the attention it deserves. I much appreciate your time, Jim. Hopefully a hat trick (3rd response) will finish this up. 🙂 And hopefully your readers get something from this. Thanks, John

January 16, 2023 at 11:08 pm

I explicitly mentioned that as an assumption in my previous comment. The schools need to represent the general population in terms of its IQ score distribution. Yes, it’s possible that the schools represent a different population. In that case, the probabilities don’t apply AND you wouldn’t even know whether the subsequent sample mean was likely to be higher or lower. You’d need to do a bit of investigation to determine whether the school represented the general population or some other population. That’s exactly why I mentioned that. And my answer was based on you wanting to use the outside knowledge of the population.

Now, if you don’t want to assume that the general population’s IQ distribution is a normal distribution with a mean of 100 and standard deviation of 15, then you’re back to what I was writing about in my previous comment where you don’t use that information. In short, if you want to know the school’s true mean IQ, you’ll need to treat it as your population. Then draw a good sized random sample from it. Or, if the school is small enough, assess the entire school. As it is, you only have a sample size of 5. That’s not going to give you a precise estimate. You’d check the confidence interval for that estimate to see a range of likely values for the school’s mean.

You could use a Bayesian approach. That’s not my forte. But if you did draw a random sample of 5 and got a mean IQ of 60, that’s so unlikely to occur if the school’s mean is 100 that using a prior of 100 in a Bayesian analysis is questionable. That’s the problem with Bayesian approaches. You need priors, for which you don’t always have solid information. In your example, you’d need to know a lot more about the schools to have reasonable priors.

In this case, it seems likely that the school’s mean IQ is not 100. It’s probably lower, but what is it? Hard to say. Seems like you’d need to really investigate the school to see what’s going on. Did you just get a really flukey sample, while the school does represent the general population? Or does the school represent a different population?

Until you really looked in-depth at the school to get at that information, your best estimate is your sample mean along with the CI to understand its low precision.
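To make the point about low precision concrete, here is a minimal sketch of a 95% confidence interval for a sample of 5. The individual scores are hypothetical: the discussion gives only the sample mean of 60, so the five values below were invented to produce that mean. The critical value 2.776 is the standard two-sided 95% t-table value for 4 degrees of freedom.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical IQ scores for 5 students. These values are invented for
# illustration; only the sample mean of 60 comes from the discussion.
scores = [45, 52, 60, 68, 75]

n = len(scores)
sample_mean = mean(scores)
sample_sd = stdev(scores)  # sample standard deviation (n - 1 denominator)
standard_error = sample_sd / sqrt(n)

# Two-sided 95% critical value of the t-distribution with n - 1 = 4
# degrees of freedom, taken from a standard t-table.
T_CRITICAL_DF4 = 2.776

margin = T_CRITICAL_DF4 * standard_error
ci_low = sample_mean - margin
ci_high = sample_mean + margin

print(f"Sample mean: {sample_mean:.1f}")
print(f"95% CI: {ci_low:.1f} to {ci_high:.1f}")
```

With these made-up scores the interval spans roughly 30 IQ points, which is the kind of imprecision to expect from only five observations.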

January 16, 2023 at 9:54 am

Hi Jim, Thanks for the response. I was assuming that we DO KNOW it has a general population mean of 100. I was also thinking in a Bayesian way that, knowing the general population mean is 100, the REAL mean of the one school is BETWEEN 60 and 100 and the REAL mean of the other school is BETWEEN 100 and 140. It is much like if you were a baseball scout and you know that the average player is a .260 hitter, and you watch him bat 10 times and get 8 hits. You would not assume his REAL ability is .800. You would assume it is BETWEEN .260 and .800 and perhaps use a Beta distribution to conclude his distribution of averages is centered at, I don’t know, something like .265, something LARGER than .260. But this seems paradoxical to the idea that if we did get a sample of 5 (or however many) and got a mean of 60, then in thinking of a confidence interval for that mean of 60, it is equally likely that the REAL mean is, say, 55 as compared to 65.
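The baseball scout idea in this comment can be sketched with a Beta prior updated by the observed at-bats. This is only an illustration of the reasoning, not an analysis from the post. The prior strength `PRIOR_AT_BATS` is a hypothetical choice, since the comment gives only the prior mean of .260 and the 8 hits in 10 at-bats.

```python
# Beta-binomial update: the prior is centered on the league average and the
# data are 8 hits in 10 at-bats.
LEAGUE_AVERAGE = 0.260

# Hypothetical prior strength: how many at-bats of evidence the prior is
# treated as being worth. This number is an assumption for illustration.
PRIOR_AT_BATS = 300

prior_alpha = LEAGUE_AVERAGE * PRIOR_AT_BATS        # prior "hits"
prior_beta = (1 - LEAGUE_AVERAGE) * PRIOR_AT_BATS   # prior "outs"

hits, at_bats = 8, 10

# Conjugate update: add observed hits and outs to the prior counts.
post_alpha = prior_alpha + hits
post_beta = prior_beta + (at_bats - hits)
posterior_mean = post_alpha / (post_alpha + post_beta)

print(f"Observed average: {hits / at_bats:.3f}")
print(f"Posterior mean:   {posterior_mean:.3f}")
```

The posterior mean lands between .260 and .800 and much closer to .260, because ten at-bats carry little weight against a prior of this assumed strength. A weaker prior would move the estimate further toward the observed average.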

January 16, 2023 at 6:50 pm

Gotcha! So, yes, using the knowledge outside our dataset, we can draw some additional conclusions.

For one thing, there’s regression to the mean. Usually that applies to one unusual observation being followed by an observation that is likely to be closer to the mean. In this case, we can use the same principle but apply it to samples of N = 5. You’ve got an unusual sample from each school. If you were to draw another random sample of the same size from each school, those samples are likely to be closer to the mean.

There are a few caveats. We’re assuming that we’re drawing random samples and that the schools reflect the general population rather than special populations.

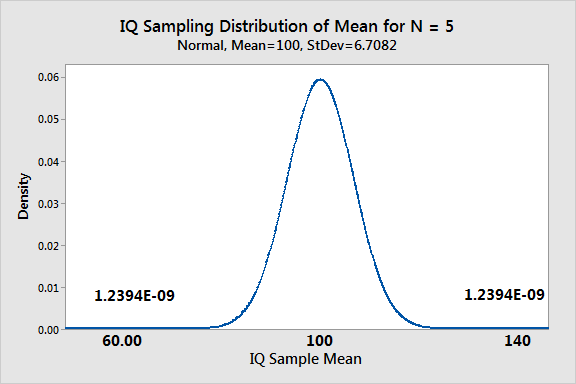

As for getting the probability for N = 5 of the IQ sample mean equaling 60 or 140, we can use the sampling distribution as I mentioned. We need to find the probability of obtaining a random sample 140. The graph shows the sampling distribution below.

The probability for 140 is the same. Total probability getting either condition in one random sample is 0.0000000024788.

As you can see, either probability is quite low! Basically, don’t count on getting either sample mean under these conditions! Those sample means are just far out in the tails of the sampling distribution.

But, if you did get either of those means, what’s the probability that the next random sample of N = 5 will be closer to the true mean?

That probability equals: 1 – 0.0000000024788 = 0.9999999975212

It’s virtually guaranteed in this case that the next random sample of 5 will be closer to the correct mean!
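The probabilities in this reply can be reproduced from the sampling distribution of the mean. The following is a minimal sketch assuming Python's standard-library `statistics.NormalDist` and the stated population values (mean 100, standard deviation 15, N = 5); the variable names are illustrative.

```python
from math import sqrt
from statistics import NormalDist

population_mean = 100
population_sd = 15
n = 5

# Standard error of the mean: the standard deviation of the sampling
# distribution for samples of size n.
standard_error = population_sd / sqrt(n)
sampling_dist = NormalDist(mu=population_mean, sigma=standard_error)

# Probability of a sample mean of 60 or lower, and of 140 or higher.
p_low = sampling_dist.cdf(60)
p_high = 1 - sampling_dist.cdf(140)

total_probability = p_low + p_high

# Probability that the next sample mean is less extreme than 60 or 140.
p_closer = 1 - total_probability

print(f"Standard error: {standard_error:.4f}")
print(f"P(mean <= 60 or mean >= 140): {total_probability:.13f}")
print(f"P(next sample mean is closer): {p_closer:.13f}")
```

Both sample means sit about six standard errors from 100, which is why the tail probabilities are so small.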

January 13, 2023 at 8:11 am

Hi Jim, Thanks for these posts. I have a question related to the error term in a normal distribution. Let’s assume that we are taking IQs at various high schools. We go to one high school, take 5 IQs, and the mean is 140. We go to another, take 5 IQs, and the mean is 60. We are trying to determine the population mean at each school. Of course, we know that the 140 and 60 are just estimates for the respective high schools. Is there some “boundedness” concept (it seems intuitive) that would suggest that the real mean at the one school is more likely higher than 60 than lower, and the mean at the other school is more likely lower than 140 than higher? I am thinking that a probability density function of error terms about each of 60 and 140 would illustrate that. Can we confirm this mathematically? I hope my question makes sense. Thanks, John

January 13, 2023 at 11:54 pm

That’s kind of a complex question! In the real world, we know that IQs are defined as having a general population mean of 100. But let’s pretend we don’t know that.

I’ll assume that you randomly sampled the 5 students at both of the schools.

Going strictly by the data we gathered, it would be hard for us to know whether the overall HS population mean is between the two schools. It’s also possible that the two high schools have different means for reasons unknown to us. So, it’s really hard to draw conclusions. Particularly with only a measly 5 observations at two schools. There’s going to be a large margin of uncertainty with both estimates.

So, we’re left in a situation where we don’t know what the overall population mean is, we don’t know if the two high schools should have different means or not, and the two estimates we have come with wide margins of error.

In short, we don’t know much! What we should do over time is build our knowledge in this area. Get large samples from those two schools and other schools. Try to identify reasons why the IQs might be different at various schools. Or find that they should be nearly identical. After we build up our knowledge, we can use it to aid our understanding.

But with just 5 observations at two schools and ignoring our real-world knowledge, we couldn’t put much faith in the estimates of 60 and 140, and there’s really not much reason to assume the 60 should be higher and the 140 lower.

Now, if you want to apply real-world knowledge that we do have, yes, we can be reasonably sure that the 60 is too low and the 140 is too high. It is inconceivable that any reasonably sized school would have either mean for the entire school population unless they were schools intended for special students. It is much more likely that those are fluky samples based on the tiny sample size. We can know all that because we know that the population average truly is 100 with a standard deviation of 15. Given that fact, you could look at the sampling distribution of the mean for each school’s size to determine the probability of having such an extreme mean IQ for the entire school.

But it wasn’t clear from your question if you wanted to incorporate that information or not. If you do, then what you need is the sampling distribution of the mean and use that to calculate the probability for each school. It won’t tell you for sure whether the means are too high or too low, but you’ll see how unlikely they are to occur given the known properties of the population, and you could conclude it’s more likely they’re just wrong–too low and too high!

December 2, 2022 at 2:44 pm

Hello. I’m new to this field and have a very basic question. If the average number of engine hours on a small aircraft between oil services is 29.9 and my SD is 14.1, does that mean 68.27% of all values lie between 44.0 (29.9+14.1) and 15.8 (29.9-14.1)?

December 2, 2022 at 5:12 pm

You do need to assume or know that the distribution in question follows a normal distribution. If it does, then, yes, your conclusions are absolutely correct!

In statistics classes, you’ll frequently have questions that state you can assume the data follow a normal distribution or that it’s been determined that they do. In the real world, you’ll need previous research to establish that. Or you might use it as a rough estimate if you’re not positive about normality but pretty sure the distribution is at least roughly normal.

So, there are a few caveats but yes, your understanding is correct.
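Under the normality assumption discussed in this reply, the 68.27% figure can be checked directly. This is a minimal sketch using Python's standard-library `statistics.NormalDist` with the mean and SD from the question; the variable names are illustrative.

```python
from statistics import NormalDist

mean_hours = 29.9
sd_hours = 14.1

# Assumes engine hours between oil services are normally distributed.
engine_hours = NormalDist(mu=mean_hours, sigma=sd_hours)

lower = mean_hours - sd_hours  # one SD below the mean
upper = mean_hours + sd_hours  # one SD above the mean

share_within_one_sd = engine_hours.cdf(upper) - engine_hours.cdf(lower)

print(f"Range: {lower:.1f} to {upper:.1f} hours")
print(f"Share of values in range: {share_within_one_sd:.4f}")
```

If the real data are skewed, the share within one SD of the mean can differ noticeably from this value, which is why the normality caveat matters.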

June 27, 2022 at 1:38 pm

Hello, I have a question related to judgments regarding a population and the potential to identify a mixture distribution. I have a dataset which is not continuous – there is a significant gap between two groups of data. Approximately 98% of my data is described by one group and 2% of my data by another group. The CDF of all data looks like a mixture distribution; there is a sharp change in local slopes on either side of the non-continuous data range. I am using NDE methods to detect residual stress levels in pipes. My hypothesis is that discrete stress levels exist as a result of manufacturing methods. That is, you either have typical stress levels or you have atypical stress levels. 1. Can the non-continuous nature of the data suggest a mixture distribution? 2. What test(s) can be performed to establish that the two sub-groups are not statistically compatible?

December 20, 2021 at 10:10 am

Thanks for explaining how to identify what distribution to use. I was confused at first. I have understood that the normal distribution is continuous, and we can see on the X-axis that the SD line is not closed on it. The Poisson is discrete, thus with a time frame/limit. In the binomial, the expected outcome is pos/neg, yes/no, false/true, etc. Thus two outcomes.

I can also say that in the normal, there is complexity in the random variables to be used.

December 21, 2021 at 1:03 am

Hi Evalyne,

I’m not sure that I understand what your question is. Yes, normal distributions require continuous data. However, not all continuous data follow a normal distribution.

Poisson distributions use discrete data–specifically count data. Other types of discrete data do not follow the Poisson distribution. For more information, read about the Poisson distribution.

Binomial distributions model the expected outcomes for binary data. Read more about the binomial distribution.

December 20, 2021 at 10:00 am

Thanks, Jim Frost, for your resource. I am learning this and it has added a lot to my knowledge.

November 15, 2021 at 2:44 pm

Thanks for your explanations, they are very helpful

October 5, 2021 at 4:21 am

Interesting. I need help. Let’s say I have 5 columns: A, B, C, D, and E.

- A follows a Poisson distribution

- B follows a binomial distribution

- C follows a Weibull distribution

- D follows a negative binomial distribution

- E follows an exponential distribution

Now that I know what type of distribution my data follow, what should I do next? How can this help me in exploratory data analysis, in decision making, or in machine learning?

What if I don’t know what type of distribution my data follow because they all look confusing or similar when plotting them? Is there any equation that can help? Are there libraries that help identify the probability distribution of the data?

Kindly help

October 7, 2021 at 11:50 pm

Hi Savitur,

There are distribution tests that will help you identify the distribution that best fits your data. To learn how to do that, read my post about How to Identify the Distribution of Your Data.

After you know the distribution, you can use it to make better predictions, estimate probabilities and percentiles, etc.
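As a rough illustration of comparing candidate distributions in code, the sketch below fits a normal and an exponential distribution by maximum likelihood and compares them with AIC (lower is better). This is a simplified likelihood comparison, not the distribution tests referred to above. The data are simulated, and the function names and settings are assumptions made for this example.

```python
import math
import random


def normal_log_likelihood(data):
    """Log-likelihood of the data under a normal distribution whose mean
    and variance are set to their maximum likelihood estimates."""
    n = len(data)
    mean = sum(data) / n
    variance = sum((x - mean) ** 2 for x in data) / n
    return -0.5 * n * (math.log(2 * math.pi * variance) + 1)


def exponential_log_likelihood(data):
    """Log-likelihood of the data under an exponential distribution whose
    rate is set to its maximum likelihood estimate, 1 / mean."""
    n = len(data)
    mean = sum(data) / n
    return -n * (math.log(mean) + 1)


def aic(log_likelihood, num_params):
    """Akaike information criterion: lower values indicate a better fit."""
    return 2 * num_params - 2 * log_likelihood


# Simulated data for illustration: 500 draws from an exponential distribution.
rng = random.Random(0)
data = [rng.expovariate(0.5) for _ in range(500)]

aic_by_model = {
    "normal": aic(normal_log_likelihood(data), num_params=2),
    "exponential": aic(exponential_log_likelihood(data), num_params=1),
}
best_model = min(aic_by_model, key=aic_by_model.get)

for name, value in aic_by_model.items():
    print(f"{name:>12}: AIC = {value:.1f}")
print(f"Best fit by AIC: {best_model}")
```

A lower AIC only says one candidate fits better than the other. It does not show that either candidate fits well, so a probability plot or a formal goodness-of-fit test is still worth checking.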

June 17, 2021 at 2:25 pm